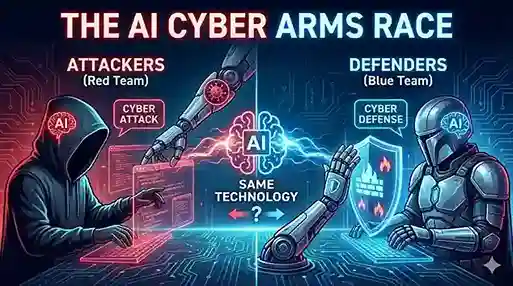

The cybersecurity landscape has reached a critical inflection point: the same artificial intelligence technologies powering enterprise innovation are now enabling unprecedented cyberattacks. In late 2025, Anthropic disclosed that Chinese state-sponsored actors had used Claude Code to automate an estimated 80-90% of an intrusion campaign, handling reconnaissance, exploit generation, and lateral movement preparation with minimal human oversight. This incident marks a paradigm shift. Attackers and defenders now wield the same AI tools, fueling an arms race that threatens to overwhelm traditional defense mechanisms.

The stakes extend far beyond theoretical scenarios. In 2024, reported losses to cybercrime reached an unprecedented $16.6 billion, a 33% year-over-year increase, according to the FBI’s Internet Crime Report. The velocity, sophistication, and scale of AI-enhanced attacks are transforming the threat landscape. They are also exposing critical weaknesses in how organizations approach security. For Chief Information Security Officers and security professionals, understanding this dual-use technology is no longer optional; it is essential to organizational survival.

Energy Utilities Under Siege: The Tripling Threat

The International Energy Agency’s 2025 report delivered a stark warning: cyberattacks on energy utilities have tripled in the past four years and have become more sophisticated because of artificial intelligence. This escalation represents one of the most concerning developments in critical infrastructure security, with implications extending far beyond individual organizations to national security and economic stability.

The Scale of the Utility Sector Crisis

The numbers paint an alarming picture of accelerating threat activity. U.S. utilities faced 1,162 cyberattacks in 2024, representing a nearly 70% jump compared to 689 attacks during the same period in 2023. By the third quarter of 2024, the situation had deteriorated further, with utilities experiencing a staggering 234% year-over-year increase in attacks, averaging 1,339 weekly incidents according to Check Point Research.
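Both "nearly 70%" and "234%" describe relative growth over a baseline, so they are easy to misread. The minimal Python sketch below reproduces the first figure and shows what the second implies. The function names are illustrative. The implied prior-year weekly level is derived arithmetic, not a number Check Point reported.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change in percent: (new - old) / old * 100."""
    if old == 0:
        raise ValueError("baseline must be non-zero")
    return (new - old) / old * 100


def implied_baseline(current: float, pct_increase: float) -> float:
    """Back out the prior value from a reported percentage increase."""
    return current / (1 + pct_increase / 100)


# 689 attacks (2023 period) -> 1,162 attacks (same period in 2024)
print(f"{percent_change(689, 1162):.1f}%")  # 68.7%

# A 234% increase means 3.34x the prior level, so 1,339 weekly incidents
# implies a prior-year level of roughly 401 per week (derived, not reported).
print(round(implied_baseline(1339, 234)))  # 401
```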

Europe saw cyberattacks in the power sector double between 2020 and 2022, with 48 successful attacks hitting Europe’s energy infrastructure in 2022 alone. The worldwide average cost of a data breach in the energy sector reached $4.72 million in 2022, setting a new record high. Ransomware attacks targeting the energy and utilities sectors increased by 80% in 2024 compared to the previous year, according to Trustwave’s January 2025 report.

Why Energy Infrastructure Presents Such Attractive Targets

Critical infrastructure operators, including gas, water, and particularly power utilities, are favored targets for malicious cyber activity for several reasons. The electricity sector powers everything from homes and businesses to hospitals and national defense systems, so disruptions carry severe risks with widespread economic and social consequences.

The energy transition toward renewable sources has inadvertently expanded the attack surface. Global renewable power capacity increased by 473 gigawatts in 2023, up 14% on the previous year, according to the International Renewable Energy Agency. The IEA anticipates roughly 5,500 gigawatts of new renewable capacity coming online by 2030. This rapid expansion relies on digital technologies such as IoT, artificial intelligence, and middleware to integrate and manage renewables. Each of these adds potential attack surface across dispersed and relatively new systems.

Specific Vulnerabilities in Modern Energy Systems

Old infrastructure and legacy systems present one of the biggest challenges. Many operational technology systems were built decades ago and were not designed with cyber threats in mind. They often lack updates, patches, and support, and older software and hardware do not always work with new security solutions. Upgrading them without disrupting operations is a complex task.

The convergence of IT and OT networks has eliminated the air gap that previously protected operational systems. The push for real-time data, remote monitoring, and automation has connected these systems to IT networks, making operations more efficient but giving cybercriminals new ways to exploit weaknesses that were once isolated.

The rise of decentralized assets like electric vehicles, heat pumps, and solar panels connecting to the grid at low voltage levels adds complexity, creating potential security gaps. A recent study demonstrated that a targeted attack on personal electric vehicles and fast-charging stations using publicly available data could cause significant disruptions to local power supply.

Forescout’s Vedere Labs published research in March 2025 revealing severe systemic security weaknesses in solar power infrastructure. The SUN:DOWN research discovered 46 new vulnerabilities across three of the world’s top 10 solar inverter vendors. More alarmingly, 80% of all vulnerabilities disclosed in solar power systems over the past three years were classified as high or critical severity, with the most severe reaching CVSS scores of 9.8 to 10.

Real-World Impact: Case Studies

In May 2025, a Southeast Asian energy provider was hit by a ransomware attack attributed to the NightSpire group. The attackers disabled control systems for 18 days while demanding an $8 million ransom. The incident is a stark example of how ransomware and data theft can reach industrial control systems.

The most significant energy-sector ransomware attack of 2024 was RansomHub’s breach of Halliburton in August. The attack cost Halliburton $35 million in losses after the breach caused the company to shut down IT systems and disconnect customers.

Water infrastructure has proven equally vulnerable. In October 2024, American Water detected a cyberattack that forced it to disconnect its customer portal and pause billing. It is the largest regulated water utility in the United States, serving more than 14 million people across 14 states. Earlier, in November 2023, the Municipal Water Authority of Aliquippa, near Pittsburgh, had to shut down operational technology systems after the Iran-backed group “Cyber Av3ngers” compromised one of its booster stations. Federal investigators discovered that at least 10 additional water facilities throughout the United States were breached using the same methodology.

The AI Multiplier Effect

Artificial intelligence has become both a critical defense tool for energy companies and a force multiplier for attackers. According to a Boston Consulting Group survey, almost three-quarters of the energy and utilities industry is currently using generative AI for cybersecurity operations. However, the sector faces a 42% shortfall in cybersecurity personnel, and AI adoption is partly an attempt to close that dangerous skills gap.

The North American Electric Reliability Corporation has warned that the number of susceptible points on the electrical grid grows by approximately 60 per day as connectivity expands. Some Chinese-made solar inverters were also found to contain built-in communication equipment whose purpose has not been fully explained. This has raised fears that covert malware could be embedded in critical energy infrastructure. Such malware could then be triggered remotely to shut down inverters and cause widespread power disruptions.

AI-Enhanced Phishing and Social Engineering: The Human Vulnerability Amplified

While technical vulnerabilities capture headlines, the most effective attack vector remains the oldest: human psychology. Artificial intelligence has transformed social engineering from a labor-intensive craft into an industrialized operation with professional infrastructure and advanced expertise in organizational psychology.

The Staggering Scale of AI-Enhanced Social Engineering

The FBI’s 2024 Internet Crime Report logged 859,532 complaints and $16.6 billion in losses, a 33% increase from 2023. Phishing and spoofing were the most common types of online crime, at 193,407 incidents. Cyber-enabled fraud accounted for 83% of total losses in 2024, representing approximately $13.7 billion across 333,981 complaints.

Business Email Compromise attacks alone accounted for $2.77 billion in reported losses, according to the FBI’s IC3 annual report. IBM’s Cost of a Data Breach Report put the global average cost of a data breach at an all-time high of $4.88 million in 2024. Breaches that start with phishing attacks are even more expensive, averaging $4.91 million in IBM’s research.

The AI Transformation of Phishing Campaigns

According to CybelAngel’s External Threat Intelligence report, 67.4% of all phishing attacks in 2024 utilized some form of artificial intelligence. AI tools like ChatGPT are used to zero in on the main concerns of employees and turn those pain points into convincing phishing emails free of grammatical mistakes.

One cybersecurity researcher found that it only took five prompts to instruct ChatGPT to generate phishing emails for specific industry sectors. The median time for a user to fall for a phishing email is less than 60 seconds, meaning a compromise can happen before an automated security system or SOC analyst has time to react.

Research from Hoxhunt revealed a critical milestone: in March 2025, AI-powered phishing campaigns became more effective than elite human red teams for the first time. AI was 24% more effective than human-crafted attacks, representing a 55% improvement in AI’s phishing performance relative to human red teams between 2023 and 2025.

The Evolution Beyond Traditional Phishing

Pretexting accounted for 50% of all social engineering attacks in 2024, almost twice the previous year’s proportion and marking the first time pretexting overtook traditional phishing as the most common social engineering method. Pretexting is now responsible for 27% of all social engineering-based breaches.

Voice-based attacks accelerated dramatically. Vishing attacks skyrocketed 442% between the first and second halves of 2024, often relying on AI-generated voice clones to impersonate executives. Smishing incidents increased 328% in 2024, affecting 76% of businesses.

Early 2025 saw a 1,450% spike in fake CAPTCHA attacks such as ClickFix campaigns, and social engineering caused 39% of initial access incidents. Most of this activity is profit-driven: 89% of social engineering attacks were financially motivated.

The Deepfake Dimension

In 2024, a multinational firm fell victim to a deepfake scam that cost the business $25 million. An employee joined a video conference call with what appeared to be the company’s CFO and other senior staff. On their instruction, the employee transferred the funds for what seemed a legitimate business reason. Every other participant on that call was an AI-generated deepfake.

CybelAngel’s requests for takedowns of fake domains surged by 116% in 2024 compared to the previous year. File-sharing phishing volume more than tripled, increasing 350% over the year, with 60% exploiting legitimate domains such as Gmail, iCloud, Outlook, Dropbox, and DocuSign.

The Quantified Human Weakness

The human element, including errors and social engineering, was involved in 68% of data breaches in 2024. In 2025, human involvement in breaches stood at 60%. Credential abuse accounted for 32% of breaches linked to human actions, followed by social tactics like phishing at 23%.

Between May 2024 and May 2025, 93% of social engineering intrusions were financially motivated. The average cost of a social engineering attack was $130,000 in 2024, while the average cost of a BEC attack reached $4.89 million. In one test, people correctly identified whether a photo was real or deepfaked only 48.2% of the time, slightly worse than a 50-50 random guess.

Automated Vulnerability Discovery: The Speed Advantage

The most significant technical shift in the AI cyber arms race is the automation of vulnerability discovery, fundamentally altering the timeline attackers need to identify and exploit security weaknesses while simultaneously providing defenders with unprecedented scanning capabilities.

The Attacker’s Accelerated Timeline

Cloudflare’s 2024 report found that threat actors weaponized proof of concept exploits in attacks as quickly as 22 minutes after the exploits were made public. This represents a catastrophic compression of the window defenders have to apply patches and remediations.

AI enables attackers to run thousands of digital “war games” to determine the most effective exploitation paths with the highest likelihood of success. This elevates Advanced Persistent Threat campaigns from human-designed playbooks to machine-optimized strategies.

Microsoft’s 2024 Digital Defense Report highlights a measurable increase in AI-assisted APT reconnaissance and impersonation at scale. Proofpoint’s 2024 Threat Report identifies AI-enhanced phishing as one of the most scalable and dangerous emerging social engineering tactics. Cisco’s 2024 Security Report shows that 63% of organizations cannot detect or respond to AI-generated attacks quickly enough.

The Defender’s New Tools

At the same time, 2024 saw the first reports from researchers across academia and the tech industry using AI for vulnerability discovery in real-world code. Google’s Big Sleep, an AI agent developed by Google DeepMind and Google Project Zero, actively searches and finds unknown security vulnerabilities in software.

By November 2024, Big Sleep had found its first real-world security vulnerability. It has since discovered several more, exceeding expectations and accelerating AI-powered vulnerability research. Most recently, acting on intelligence from Google Threat Intelligence, the Big Sleep agent discovered an SQLite vulnerability (CVE-2025-6965).

Big Sleep is being deployed to help improve the security of widely used open-source projects, a major win for ensuring faster, more effective security across the internet. These cybersecurity agents free up security teams to focus on high-complexity threats, dramatically scaling their impact and reach.

DARPA’s AI Cyber Challenge Results

The Defense Advanced Research Projects Agency’s AI Cyber Challenge (AIxCC) sought to address a persistent bottleneck in cybersecurity: patching vulnerabilities before attackers can discover or exploit them. The seven finalist teams were scored on how quickly and accurately their systems could identify and patch synthetic vulnerabilities across 54 million lines of code.

In the final scoring round, the systems discovered 77% of the synthetic vulnerabilities and successfully patched 61% of the total, taking an average of 45 minutes per patch. This represents a fundamental shift in defensive capabilities. It also shows that even cutting-edge AI systems cannot yet achieve perfect detection or remediation.

The Growing Vulnerability Pipeline

HackerOne’s 2025 data reveals a troubling trend: valid AI-related vulnerability reports have surged 210% year-over-year, while the number of security researchers focused on AI and machine learning assets has doubled. Teams are finding more vulnerabilities than they can fix, creating a widening gap between detection and defense.

As organizations race to embed AI across products, workflows, and customer experiences, their attack surface expands faster than ever. Every new model, API, and integration introduces potential exposure points that traditional cybersecurity workflows cannot manage.

The market for artificial intelligence in cybersecurity grew from $23.12 billion in 2024 to $28.51 billion in 2025. It is projected to reach $136.18 billion by 2032, a compound annual growth rate of 24.81% from 2024 to 2032. This growth reflects the surge in advanced cyber threats and the critical role AI now plays in pre-emptive security and automated incident response.
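Compound annual growth rate (CAGR) is the constant yearly rate that carries a start value to an end value: (end / start) ^ (1 / years) - 1. The short Python sketch below applies that formula to the market figures quoted above. It shows that the 24.81% rate corresponds to an eight-year 2024 baseline rather than the 2025 figure.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market size in billions of USD, as quoted in the text.
print(f"2024 -> 2032: {cagr(23.12, 136.18, 8):.2%}")  # 24.81%
print(f"2025 -> 2032: {cagr(28.51, 136.18, 7):.2%}")  # 25.03%
```

Measured from the 2025 figure over seven years, the same endpoint implies roughly 25.0% per year. The difference is small, but it is a reminder to check which base year a projected growth rate assumes.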

AI-Powered Defense Systems: Leveling the Playing Field

While AI empowers attackers, it simultaneously provides defenders with capabilities that would be impossible to achieve through human effort alone. The challenge lies in deploying these defensive technologies effectively while addressing their inherent limitations.

Real-Time Threat Detection and Response

AI-driven threat detection systems can analyze massive datasets, identify anomalies in real time, and provide predictive threat intelligence. This allows some threats to be contained without waiting for human intervention. Because these automated defenses learn from each new incident, they can adapt as attack patterns change, making networks more resilient.

AI implementation has shown a 35% improvement in fraud detection rates. Machine learning also shortens the time needed to detect, contain, and mitigate breaches. AI tools monitor attack trends, predict threats, and adapt defenses accordingly.

Behavioral analytics help organizations identify evolving threats as well as known vulnerabilities. Traditional security defenses rely on attack signatures and indicators of compromise to discover threats. With thousands of new attacks launched every year, signature matching alone cannot keep pace. AI models instead build profiles of the applications deployed on a network and process large volumes of device and user data. They then compare incoming activity against those profiles to block potentially malicious behavior.
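The profile-and-compare idea can be illustrated with a deliberately simple statistical baseline. This is a minimal sketch, not how any particular product works. It assumes a single numeric feature per user (daily megabytes downloaded, a hypothetical choice) and flags values more than three standard deviations from that user's history (the threshold is also an assumption). Real behavioral analytics combine many features and usually learned models.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Profile:
    """Per-entity baseline learned from historical observations."""
    center: float
    spread: float


def build_profile(history: list[float]) -> Profile:
    if len(history) < 2:
        raise ValueError("need at least two observations to estimate spread")
    return Profile(mean(history), stdev(history))


def is_anomalous(value: float, profile: Profile, threshold: float = 3.0) -> bool:
    """Flag values more than `threshold` standard deviations from the baseline."""
    if profile.spread == 0:
        return value != profile.center
    return abs(value - profile.center) / profile.spread > threshold


# Hypothetical feature: megabytes a user downloads per day over the past week.
profiles = {"alice": build_profile([120, 135, 110, 128, 140, 125, 118])}
print(is_anomalous(130, profiles["alice"]))  # False: within normal range
print(is_anomalous(900, profiles["alice"]))  # True: possible exfiltration
```

Because the baseline is per user, the same 900 MB day might be normal for a data engineer and anomalous for an accounts clerk. Signature matching cannot capture that kind of context.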

The Agentic SOC Revolution

In 2025, 90% of HackerOne customers reported using Hai, an agentic AI system that automates triage, filters duplicate reports, and highlights the most urgent risks for human review. This represents a fundamental shift in how Security Operations Centers function.
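The following is not how Hai works, since its internals are not described here. It is a minimal sketch of the two triage steps the text names: filtering duplicate reports and surfacing the most urgent ones. It assumes a hypothetical duplicate fingerprint (affected asset plus weakness class) and uses CVSS score as a stand-in for urgency. Both are simplifications; agentic systems typically compare report content semantically.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    report_id: str
    asset: str      # affected host or component
    weakness: str   # weakness class, e.g. a CWE identifier
    cvss: float     # severity score used here as the urgency signal


def triage(reports: list[Report]) -> tuple[list[Report], dict[str, list[str]]]:
    """Collapse duplicates (same asset and weakness), keep the earliest, rank by CVSS."""
    canonical: dict[tuple[str, str], Report] = {}
    duplicates: dict[str, list[str]] = {}
    for report in reports:  # assumes input is in submission order
        key = (report.asset.lower(), report.weakness.upper())
        if key in canonical:
            duplicates.setdefault(canonical[key].report_id, []).append(report.report_id)
        else:
            canonical[key] = report
    queue = sorted(canonical.values(), key=lambda r: r.cvss, reverse=True)
    return queue, duplicates


incoming = [
    Report("R1", "api.example.com", "CWE-89", 9.8),
    Report("R2", "www.example.com", "CWE-79", 6.1),
    Report("R3", "API.example.com", "cwe-89", 9.8),  # same issue as R1
    Report("R4", "auth.example.com", "CWE-287", 8.1),
]
queue, duplicates = triage(incoming)
print([r.report_id for r in queue])  # ['R1', 'R4', 'R2']
print(duplicates)                    # {'R1': ['R3']}
```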

According to Darktrace’s 2025 Report, The State of AI Cybersecurity, 63% of security stakeholders believe their existing cybersecurity stack solely or mostly leverages generative AI, altering their workflows. AI can automatically trigger containment steps during an active attack, reducing triage time and giving analysts space to investigate and remediate properly.

Security teams are deploying AI agents to handle critical SOC functions. For a SOC, this means triaging alerts to reduce alert fatigue and autonomously blocking threats in seconds. These agents drastically cut response and processing times, shifting human teams from manual operators to commanders of a new AI workforce.

Advanced Authentication and Identity Protection

AI-powered identity and access management uses continuous behavioral risk scoring to detect anomalies that might indicate compromised credentials. These systems analyze communication patterns, login behaviors, transaction flows, and device trust levels, ensuring only legitimate users gain access.

Traditional verification methods fail to identify and protect organizations against AI-generated deepfakes. Strengthening authentication with advanced, adaptive security measures, such as behavior-based analysis and continuous identity verification, helps detect anomalies in real-time.
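Continuous risk scoring and adaptive authentication can be sketched as two steps. First, sum risk signals for a login. Second, map the total to allow, step-up verification, or deny. Everything below is a hypothetical illustration: the signal names, the point weights, and the 30 and 70 thresholds are assumptions chosen for readability. Production systems typically learn weights from data and use many more signals, such as transaction flows and communication patterns.

```python
from dataclasses import dataclass


@dataclass
class LoginContext:
    known_device: bool
    usual_country: bool
    usual_hours: bool
    recent_failed_attempts: int


# Hypothetical point weights (integers avoid floating-point edge cases).
NEW_DEVICE, NEW_COUNTRY, ODD_HOURS, PER_FAILURE, MAX_FAILURES = 35, 30, 10, 5, 5


def risk_score(ctx: LoginContext) -> int:
    """Sum risk points (0-100) from contextual signals."""
    score = 0
    if not ctx.known_device:
        score += NEW_DEVICE
    if not ctx.usual_country:
        score += NEW_COUNTRY
    if not ctx.usual_hours:
        score += ODD_HOURS
    score += min(ctx.recent_failed_attempts, MAX_FAILURES) * PER_FAILURE
    return min(score, 100)


def decide(score: int, step_up_at: int = 30, deny_at: int = 70) -> str:
    """Map a risk score to an access decision (thresholds are assumptions)."""
    if score >= deny_at:
        return "deny"
    if score >= step_up_at:
        return "step_up"  # e.g. ask for an additional verification factor
    return "allow"


routine = LoginContext(True, True, True, 0)
new_laptop = LoginContext(False, True, True, 0)
suspicious = LoginContext(False, False, True, 2)
for ctx in (routine, new_laptop, suspicious):
    print(risk_score(ctx), decide(risk_score(ctx)))
# 0 allow / 35 step_up / 75 deny
```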

Voice biometrics creates a unique “voiceprint” for each user based on hundreds of distinct acoustic characteristics. By analyzing pitch, tone, cadence, and rhythm, it can distinguish a legitimate user from a synthetic voice clone. Modern systems incorporate anti-spoofing capabilities trained to detect subtle artifacts indicating an audio signal is being played from a speaker rather than spoken by a human vocal tract.
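The voiceprint comparison and the anti-spoofing gate can be combined in a small decision function. This minimal sketch assumes an upstream model has already turned each recording into a fixed-length embedding (the "voiceprint") and produced a liveness score. Those models are outside the scope of the example. The three-dimensional vectors and both thresholds are hypothetical; real embeddings have hundreds of dimensions, and thresholds are tuned per deployment.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embeddings (1.0 means identical direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def verify_speaker(enrolled: list[float], probe: list[float], liveness_score: float,
                   match_threshold: float = 0.85, liveness_threshold: float = 0.5) -> bool:
    # Reject first if the (assumed) anti-spoofing model judges the audio replayed or synthetic.
    if liveness_score < liveness_threshold:
        return False
    return cosine_similarity(enrolled, probe) >= match_threshold


enrolled = [0.9, 0.1, 0.4]   # hypothetical stored voiceprint
genuine = [0.88, 0.12, 0.41]
impostor = [0.1, 0.9, 0.2]
print(verify_speaker(enrolled, genuine, liveness_score=0.9))   # True
print(verify_speaker(enrolled, impostor, liveness_score=0.9))  # False
print(verify_speaker(enrolled, genuine, liveness_score=0.2))   # False: likely replay or clone
```

Checking liveness before the match matters because a high-quality clone may produce an embedding that scores well on similarity alone.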

Limitations and Challenges

Despite these advances, AI-powered defense systems face significant challenges. While technology vendors often claim near-perfect accuracy rates in marketing materials, independent research reveals a significant performance drop in real-world scenarios. A 2024 academic paper showed that the performance of state-of-the-art open-source deepfake detectors can fall by as much as 50% when tested against new, in-the-wild deepfakes not found in their training data.

Organizations that handle video, audio, or imagery will eventually need systems that authenticate reality before it can be trusted. Tools matter, but culture matters more. Executives have to train people to slow down, verify, and build doubt into their decision-making. Doubt is not a weakness but a safety feature in the AI era.

The 2024 ISC2 Cybersecurity Workforce Study revealed that only 49% of respondents have implemented generative AI into their tools, despite widespread belief in its benefits. The disconnect between technology availability and implementation reflects organizational challenges in adopting and integrating AI-powered defenses.

The Talent Shortage in AI Security: The Critical Skills Gap

Perhaps the most concerning dimension of the AI cyber arms race is not technological but human. The cybersecurity industry faces an acute talent shortage that AI adoption has simultaneously highlighted and partially addressed, though not without creating new skills requirements.

The Scale of the Workforce Crisis

The 2024 ISC2 Cybersecurity Workforce Study revealed a deficit of more than 4.7 million cybersecurity professionals worldwide. The global cybersecurity workforce grew to 7.1 million, but another 2.8 million jobs remain unfilled. The gap between supply and demand is biggest in the Asia-Pacific region, accounting for more than half of the global shortage (56%).

Four industries account for close to two-thirds (64%) of the cybersecurity workforce shortage: financial services, materials and industrials, consumer goods, and technology. Seven out of ten attacks target those industries, and the cost per breach is among the highest.

In 2025, 59% of organizations have critical or significant skills shortages, up from 44% the previous year according to ISC2. Almost 60% of respondents agree that skills gaps have significantly impacted their ability to secure the organization, with 58% stating it puts their organizations at significant risk.

Economic Pressures Exacerbating the Gap

Budget cuts have surpassed talent scarcity as the top cause of the cybersecurity workforce shortage in 2025. In 2024, 25% of respondents reported layoffs in their cybersecurity departments, a 3% rise from 2023, while 37% faced budget cuts, a 7% rise from 2023.

These budget cuts have widened the skills gap significantly. Two-thirds of respondents said budget cuts have not only caused current staffing shortages but are also expected to make the skills gap harder to close over the next few years. Funding for training is scarce, and professionals with in-demand skills are leaving for better-paying positions.

Organizations with significant skills gaps are almost twice as likely to suffer a material data breach. IBM’s 2024 report shows these breaches cost an average of $1.76 million more than at well-staffed companies. The impact of skills shortages is stark: 88% of respondents said they led to at least one significant cybersecurity incident, with 69% experiencing more than one.

The AI Skills Dilemma

Artificial intelligence skills jumped into the top five security skills in 2024. However, hiring managers and non-hiring managers disagree about which technical skills are needed. Only 24% of hiring managers said AI and machine learning skills are needed right now, ranking them last on the skills-need list. When non-hiring managers were asked which skills are most in demand for advancing their careers, 37% named AI and ML, second only to cloud security.

Top technical skills in demand include AI and ML security (41%), followed by cloud security (36%), risk assessment (29%), and application security (28%). However, 33.9% of tech professionals report a shortage of AI security skills, particularly around emerging vulnerabilities like prompt injection.

Non-Technical Skills Become Critical

With AI, many anticipate an uptick in the need for non-technical skills. Overall, strong communication skills were listed as the most in-demand skill set across all of cybersecurity, followed closely by strong problem-solving skills and teamwork and collaboration skills.

Hiring managers prioritize transferable skills that complement AI adoption rather than technical skills alone. They are increasingly willing to favor non-technical skills, which are seen as more durable as the technology evolves.

Seventy percent of respondents indicated that it is difficult to find candidates with technology-focused certifications. Respondents place such high value on certifications that 89% said they would pay for an employee to obtain a cybersecurity certification.

The Career Transformation

The traditional pathway into cybersecurity, starting as a Tier-1 SOC analyst monitoring alerts and escalating incidents, is rapidly disappearing as AI takes over alert triage. Meanwhile, AI-driven breach and attack simulations run faster, more frequently, and with broader coverage, improving testing cycles and overall readiness.

Cybersecurity professionals appear more comfortable with AI as adoption increases, with most now viewing it in positive, career-enhancing terms. Some 69% of respondents said they are integrating, testing, or evaluating the technology, while 73% claimed AI will create more specialized cybersecurity skills.

Nearly half (48%) said they are working to gain generalized AI knowledge and skills, and over a third (35%) are educating themselves on potential vulnerabilities and exploits related to AI solutions. Two-thirds of respondents think that their expertise in cybersecurity will augment AI technology, though a third are concerned their jobs could be eliminated in an AI-focused world.

Predictions for 2026 Threat Landscape: What CISOs Must Prepare For

Leading cybersecurity organizations and experts have released their forecasts for 2026, revealing converging trends that paint a picture of accelerating AI-driven threats, expanding attack surfaces, and the urgent need for fundamentally different defensive strategies.

The AI Arms Race Intensifies

Google Cloud’s Cybersecurity Forecast 2026 predicts that threat actors will leverage AI to escalate the speed, scope, and effectiveness of their attacks. Simultaneously, defenders will harness AI agents to supercharge security operations and enhance analyst capabilities. This transformation introduces new challenges, including “Shadow Agent” risks and the need for evolving identity and access management.

Prompt injection represents a critical and growing threat. Expect a significant rise in targeted attacks on enterprise AI systems where attackers manipulate AI to bypass security protocols and follow hidden commands. AI-enabled social engineering will accelerate, including vishing with AI-driven voice cloning to create hyperrealistic impersonations of executives or IT staff, making attacks harder to detect and defend against.

Trend Micro’s Security Predictions for 2026 emphasize that the defining challenge will be learning to defend against intelligent, adaptive, and autonomous threats. From vibe coding to agentic AI, the same technologies that accelerate creativity and efficiency will expand the cybercriminal toolkit by introducing new attack vectors, automating attacks, exploiting AI ecosystems, and blurring the line between innovation and intrusion.

The Agentic AI Paradigm Shift

The widespread adoption of AI agents will create new security challenges requiring organizations to develop new methodologies and tools to effectively map their AI ecosystems. A key part of this will be the evolution of identity and access management to treat AI agents as distinct digital actors with their own managed identities.

While an autonomous agent is a tireless digital employee, it is also a potent insider threat. An agent is always on and never sleeps. If improperly configured, it can hold the keys to the kingdom: privileged access to critical APIs, data, and systems, backed by implicit trust.
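Treating agents as distinct digital actors could mean giving each agent its own identity record and checking every action against it. The record would hold an accountable owner, an explicit set of scopes, and a short expiry. The sketch below is a hypothetical, in-memory illustration of that least-privilege check. The agent ID, owner, and scope strings are invented. In practice, an identity provider or IAM platform would issue and verify such credentials.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str             # unique identity, never shared with a human account
    owner: str                # accountable human or team
    scopes: frozenset[str]    # explicit least-privilege permissions
    expires_at: datetime      # short-lived credential limits the blast radius


def authorize(agent: AgentIdentity, scope: str, now: Optional[datetime] = None) -> bool:
    """Allow an action only if the credential is unexpired and the scope was granted."""
    now = now or datetime.now(timezone.utc)
    if now >= agent.expires_at:
        return False
    return scope in agent.scopes


issued = datetime(2026, 1, 1, 9, 0, tzinfo=timezone.utc)
bot = AgentIdentity(
    agent_id="invoice-agent-01",          # hypothetical
    owner="finance-ops",                  # hypothetical
    scopes=frozenset({"invoices:read", "invoices:create"}),
    expires_at=issued + timedelta(hours=1),
)
print(authorize(bot, "invoices:read", issued))                         # True
print(authorize(bot, "payments:approve", issued))                      # False: never granted
print(authorize(bot, "invoices:read", issued + timedelta(hours=2)))   # False: expired
```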

Palo Alto Networks predicts a surge in AI agent attacks in 2026. Adversaries will no longer make humans their primary target but will look to compromise the agents. With a single well-crafted prompt injection or by exploiting a tool-misuse vulnerability, bad actors can co-opt an organization’s most powerful, trusted employee.
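Prompt injection itself is hard to detect reliably. A common complementary layer is to limit what a hijacked agent can do. This minimal sketch applies a policy to each tool call. Unknown tools are blocked, and high-risk tools need human approval whenever untrusted content (a fetched web page or inbound email, for example) influenced the request. It assumes the agent runtime can track that influence, which is the hard part in practice. The tool names and risk tiers are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical tool inventory and risk tiers.
ALLOWED_TOOLS = {"search_docs", "summarize", "send_email", "export_records"}
HIGH_RISK_TOOLS = {"send_email", "export_records"}


@dataclass
class ToolCall:
    tool: str
    argument: str
    influenced_by_untrusted_input: bool  # assumed to be set by the runtime's taint tracking


def review(call: ToolCall) -> str:
    """Return 'allow', 'require_human_approval', or 'block' for a proposed tool call."""
    if call.tool not in ALLOWED_TOOLS:
        return "block"  # tool-misuse attempts outside the inventory fail closed
    if call.tool in HIGH_RISK_TOOLS and call.influenced_by_untrusted_input:
        return "require_human_approval"
    return "allow"


print(review(ToolCall("delete_database", "prod", False)))             # block
print(review(ToolCall("send_email", "attacker@example.net", True)))   # require_human_approval
print(review(ToolCall("summarize", "q3-report.pdf", True)))           # allow
```

The design choice is to fail closed on anything not explicitly listed. That way, a well-crafted injection can at worst misuse low-risk tools without a human in the loop.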

IBM’s predictions for 2026 center on how the integration of autonomous AI into enterprise environments can be both a boon and a burden. The agentic shift is no longer theoretical but underway. Autonomous AI agents are reshaping enterprise risk, and legacy security models will crack under the pressure.

Data Poisoning and Trust Erosion

A new frontier of attacks will be data poisoning: covertly corrupting the data used to train core AI models. Adversaries will manipulate training data at its source to create hidden backdoors and untrustworthy black-box models. This marks a seismic shift from stealing data to corrupting it.

The traditional perimeter is irrelevant when the attack is embedded in the very data used to create the enterprise’s core intelligence. This new threat exposes a critical, structural gap that is organizational, not necessarily technological. The people who understand the data (developers and data scientists) and the people who secure the infrastructure (the CISO’s team) operate in two separate worlds.

For leaders, this ignites a crisis of trust: if the data flowing through the cloud cannot be trusted, the AI built on that data cannot be trusted. Tools like data security posture management (DSPM) and AI security posture management (AI-SPM) will become non-negotiable cloud imperatives in 2026 as AI workloads and data volumes explode.
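One basic integrity control for training data is a hash manifest. Record a SHA-256 digest for each file when the data is vetted, then verify the manifest before every training run. The sketch below is a minimal illustration using only the Python standard library. It is not a DSPM or AI-SPM product. It detects tampering that happens after the manifest is taken, but it cannot detect poisoning already present at the source when the data was vetted. File names and contents are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Stream a file through SHA-256 so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its SHA-256 digest."""
    return {
        path.relative_to(data_dir).as_posix(): sha256_file(path)
        for path in sorted(data_dir.rglob("*"))
        if path.is_file()
    }


def verify_manifest(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Report files removed, altered, or added since the manifest was taken."""
    current = build_manifest(data_dir)
    problems = []
    for name, expected in manifest.items():
        if name not in current:
            problems.append(f"missing: {name}")
        elif current[name] != expected:
            problems.append(f"modified: {name}")
    for name in current.keys() - manifest.keys():
        problems.append(f"unexpected: {name}")
    return sorted(problems)


# Demonstration on a throwaway directory standing in for a training-data share.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "a.csv").write_text("x,label\n1,0\n")
    (root / "b.csv").write_text("x,label\n2,1\n")
    manifest = build_manifest(root)                  # taken when data was vetted
    (root / "a.csv").write_text("x,label\n1,1\n")    # a flipped label
    (root / "b.csv").unlink()
    (root / "c.csv").write_text("x,label\n3,0\n")    # injected file
    problems = verify_manifest(root, manifest)

print(problems)
# ['missing: b.csv', 'modified: a.csv', 'unexpected: c.csv']
```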

Ransomware Evolution

Ransomware will remain a defining threat but will evolve from a disruptive event into a systemic issue. Every enterprise dependency, from AI models and supply chains to APIs and even business relationships, will double as an attack surface. The future of ransomware is not just about encryption but also the exploitation of trust itself.

The year ahead will see agentic AI handle critical portions of the ransomware attack chain, such as reconnaissance, vulnerability scanning, and even ransom negotiations, all without human oversight. In mid-2025, a joint advisory revealed that the Play ransomware group had hit an estimated 900 organizations worldwide since 2022.

Modern extortion techniques will bypass multi-factor authentication through increasingly sophisticated methods. Automation, complex supply chains, and expanding digital ecosystems will enable attackers to find and exploit vulnerabilities faster than organizations can patch them.

Identity as the New Perimeter

By 2026, it will be broadly accepted that breaches are no longer about “getting in” through firewalls but about logging in. Cyber adversaries have learned that exploiting human trust, onboarding workflows, help desks, and identity recovery processes is far more reliable than exploiting software vulnerabilities.

Given the sensitivity of AI-driven data and agentic workflows, identity will need to be treated as critical national infrastructure. This shift will require specialized threat-hunting capabilities, AI-specific protections, and infrastructure-level security controls to defend against increasingly sophisticated external attacks.

Identity will no longer be just an access layer but a strategic security priority on par with networks and cloud. The defining theme of cybersecurity in 2026 will be trust, or rather, the lack of it.

SaaS and Cloud Targeting

Attackers have learned a simple lesson: compromising SaaS platforms can have big payouts. As a result, expect more targeting of commercial off-the-shelf SaaS providers, which are often highly trusted and deeply integrated into business environments.

In 2026, expect more breaches where attackers leverage valid credentials, APIs, or misconfigurations to bypass traditional defenses entirely. Some attacks may involve software with unfamiliar brand names, but their downstream impact will be significant.

Quantum Computing Threats

Adversaries are already conducting “harvest now, decrypt later” (HNDL) attacks, systematically collecting encrypted data with the intention of decrypting it once quantum computing becomes viable. The threat is real today precisely because attackers need no decryption capability now: they simply store encrypted communications, financial records, and other sensitive data until quantum computers can break the encryption.

Organizations will need to adopt quantum-resistant defenses, including quantum-resistant tunneling, comprehensive cryptographic libraries, and related technologies. As practical quantum computing draws closer, adopting these measures early will be essential to keeping pace with a rapidly evolving threat landscape.
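One common way to reason about HNDL exposure is Mosca's inequality: if the number of years data must stay confidential (x) plus the years needed to migrate a system to post-quantum cryptography (y) exceeds the years until a cryptographically relevant quantum computer exists (z), then data harvested today is at risk. The sketch below applies that rule to a small asset list. The asset names, the `DataAsset` type, and every number, including the 12-year horizon, are hypothetical placeholders for illustration, not forecasts.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataAsset:
    name: str
    shelf_life_years: float  # x: how long the data must remain confidential
    migration_years: float   # y: time needed to move this system to post-quantum crypto


def hndl_exposed(asset: DataAsset, years_to_crqc: float) -> bool:
    """Mosca's inequality: the asset is at risk if x + y > z."""
    return asset.shelf_life_years + asset.migration_years > years_to_crqc


def prioritize(assets: List[DataAsset], years_to_crqc: float) -> List[DataAsset]:
    """Return exposed assets, largest overshoot of the quantum horizon first."""
    exposed = [a for a in assets if hndl_exposed(a, years_to_crqc)]
    return sorted(
        exposed,
        key=lambda a: a.shelf_life_years + a.migration_years - years_to_crqc,
        reverse=True,
    )


if __name__ == "__main__":
    # Hypothetical inventory; values are illustrative only.
    assets = [
        DataAsset("customer-pii", shelf_life_years=25, migration_years=4),
        DataAsset("session-tokens", shelf_life_years=0.01, migration_years=1),
        DataAsset("grid-scada-configs", shelf_life_years=10, migration_years=6),
    ]
    # Assumed 12-year horizon, chosen for illustration, not a prediction.
    for asset in prioritize(assets, years_to_crqc=12):
        print(asset.name)
```

Short-lived data such as session tokens drops out, while long-lived records surface first, which matches the guidance to protect the most sensitive long-term data before anything else.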

Geopolitical Cyber Warfare

Nation-state actors, including Russia’s Sandworm and China’s APT41, will dominate global cybersecurity concerns in 2026, with their tactics growing more complex. Ongoing geopolitical pressures on Russia and Iran could provoke threat actors in those countries to launch disruptive or provocative cyberattacks on their adversaries.

If these states cannot establish military dominance over their rivals, they may turn to cyberspace to make a point. Distributed denial-of-service (DDoS) attacks, disinformation campaigns, and other disruptive activity could be deployed as weapons of hybrid warfare.

The Consumer Perspective

Consumer surveys reveal deep anxiety about the AI threat landscape. More than four in five consumers are concerned about AI being used to create fake identities that are indistinguishable from real people. Over three-quarters (76%) believe that cybercrime will continue to increase and be impossible to slow down because of AI.

Sixty-nine percent either do not believe their bank or retailer is adequately prepared to defend against AI-driven cyberattacks or are unsure. One in four millennial adults reported being a victim of identity theft in the past year, and nearly a quarter have fallen for a phishing attack at home or work in the past 12 months.

Strategic Imperatives for Security Leaders

The AI cyber arms race demands fundamental shifts in how organizations approach cybersecurity. Incremental improvements to existing programs will prove insufficient against adversaries leveraging automation, intelligence, and scale.

Embrace the AI Defense Multiplier

Organizations must move beyond experimentation to systematic deployment of AI-powered defensive capabilities. This includes real-time anomaly detection, automated threat response, behavioral analytics, and predictive threat intelligence. The goal is not to replace human analysts but to amplify their capabilities and free them to focus on strategic threats that require human judgment.

Security teams should implement AI-driven breach and attack simulations that run continuously, providing constant testing and improvement of defensive posture. These simulations should specifically include AI-enhanced attack scenarios to prepare teams for the threats they will actually face.

Secure the AI Estate

As AI agents proliferate across the enterprise, organizations must develop comprehensive strategies for securing these digital workers. This includes treating AI agents as distinct entities with their own identity and access management requirements, implementing robust governance frameworks for AI deployment, and establishing clear boundaries and permissions for autonomous systems.
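Treating an agent as a distinct identity can start with something as simple as giving it its own credential record: an accountable owner, an explicit list of permitted scopes, and an expiry, with everything else denied by default. The sketch below shows that pattern. `AgentIdentity`, `issue_agent_identity`, and the scope strings such as `"tickets:read"` are hypothetical names for illustration, not the API of any particular identity platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    owner: str               # accountable human or team for this agent
    scopes: FrozenSet[str]   # explicit allow-list, e.g. {"tickets:read"}
    expires_at: datetime     # short-lived by design; must be re-issued


def issue_agent_identity(agent_id: str, owner: str, scopes: Iterable[str],
                         ttl: timedelta) -> AgentIdentity:
    """Create a scoped, expiring identity for a single AI agent (hypothetical helper)."""
    return AgentIdentity(
        agent_id=agent_id,
        owner=owner,
        scopes=frozenset(scopes),
        expires_at=datetime.now(timezone.utc) + ttl,
    )


def authorize(agent: AgentIdentity, scope: str,
              now: Optional[datetime] = None) -> bool:
    """Deny by default: allow only unexpired identities requesting a granted scope."""
    now = now or datetime.now(timezone.utc)
    if now >= agent.expires_at:
        return False
    return scope in agent.scopes


if __name__ == "__main__":
    bot = issue_agent_identity("triage-bot-01", "secops-team",
                               {"tickets:read", "tickets:comment"},
                               ttl=timedelta(hours=1))
    print(authorize(bot, "tickets:read"))    # granted scope
    print(authorize(bot, "tickets:delete"))  # never granted, so denied
```

Short lifetimes and narrow scopes limit what a hijacked or misbehaving agent can do, and the `owner` field keeps a human accountable for each autonomous system.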

Security teams must gain visibility into all AI systems operating within the organization, including shadow AI deployments by business units. Data security posture management and AI security posture management tools should be implemented to provide this visibility and identify risks.

Address the Skills Gap Proactively

Organizations cannot wait for the talent market to improve. Strategic responses include investing significantly in training and certification for existing staff, particularly in AI security skills; creating pathways for non-traditional candidates to enter cybersecurity roles; leveraging AI tools to augment smaller teams; and building partnerships with educational institutions to develop future talent pipelines.

Leaders should prioritize both technical and non-technical skills, recognizing that communication, critical thinking, and problem-solving abilities are as important as technical expertise in the AI era.

Implement Zero Trust Architecture

The traditional perimeter-based security model is obsolete. Organizations must transition to zero trust architectures that assume breach and verify every access request, regardless of source. This is particularly critical for AI agents and autonomous systems that may operate across traditional security boundaries.

Identity becomes the new security perimeter, requiring continuous authentication and authorization based on real-time risk assessment rather than static credentials.
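To make "authorization based on real-time risk" concrete, the sketch below scores each access request from a few signals and maps the score to allow, step-up authentication, or deny, with stricter thresholds for more sensitive resources. The signals, weights, and thresholds are illustrative assumptions; production policy engines draw on far richer telemetry and tuned models.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"  # require fresh authentication before proceeding
    DENY = "deny"


@dataclass
class AccessRequest:
    principal: str
    device_compliant: bool
    minutes_since_mfa: float
    impossible_travel: bool
    resource_sensitivity: int  # 1 (low) to 3 (high)


def risk_score(req: AccessRequest) -> int:
    """Add illustrative weights for each risky signal on this request."""
    score = 0
    if not req.device_compliant:
        score += 40
    if req.impossible_travel:
        score += 50
    if req.minutes_since_mfa > 60:
        score += 20
    return score


def decide(req: AccessRequest) -> Decision:
    """Evaluate every request; more sensitive resources tolerate less risk."""
    score = risk_score(req)
    deny_at = {1: 80, 2: 60, 3: 40}[req.resource_sensitivity]
    step_up_at = deny_at // 2
    if score >= deny_at:
        return Decision.DENY
    if score >= step_up_at:
        return Decision.STEP_UP
    return Decision.ALLOW


if __name__ == "__main__":
    req = AccessRequest("alice", device_compliant=True, minutes_since_mfa=90,
                        impossible_travel=False, resource_sensitivity=3)
    print(decide(req))  # stale MFA on a sensitive resource triggers a step-up
```

The point of the design is that no request is trusted because of where it comes from: the same user on the same network can be allowed, challenged, or blocked depending on current signals.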

Prepare for Quantum Threats

Organizations should begin transitioning to post-quantum encryption standards now, before quantum computing becomes viable. This includes conducting inventories of cryptographic systems, implementing crypto-agile architectures that can quickly switch between encryption methods, and prioritizing protection of the most sensitive long-term data.
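A crypto-agile design keeps algorithm choices in one policy location and tags protected data with the algorithm that produced it, so a migration becomes a configuration change plus a transition window rather than a rewrite. The sketch below illustrates the pattern with message authentication codes. The registry names and policy values are hypothetical, and the two HMAC variants are classical stand-ins chosen because they ship with Python's standard library; a post-quantum scheme from a dedicated library would be registered the same way.

```python
import hashlib
import hmac
from typing import Optional

# Registry of supported algorithms, keyed by an identifier stored with the data.
# Classical stand-ins; a post-quantum algorithm would be added here as another entry.
ALGORITHMS = {
    "hmac-sha256": hashlib.sha256,
    "hmac-sha3-256": hashlib.sha3_256,
}

# Policy: the algorithm used for new data. Changing this value migrates new writes.
CURRENT_ALG = "hmac-sha3-256"

# Algorithms still accepted for verifying older data during the transition window.
ACCEPTED_ALGS = {"hmac-sha256", "hmac-sha3-256"}


def tag(key: bytes, message: bytes, alg: Optional[str] = None) -> str:
    """Compute a MAC and prefix it with the algorithm identifier."""
    alg = alg or CURRENT_ALG
    digest = hmac.new(key, message, ALGORITHMS[alg]).hexdigest()
    return f"{alg}:{digest}"


def verify(key: bytes, message: bytes, tagged: str) -> bool:
    """Verify using whichever accepted algorithm the tag declares."""
    alg, _, digest = tagged.partition(":")
    if alg not in ACCEPTED_ALGS:
        return False  # retired or unknown algorithm
    expected = hmac.new(key, message, ALGORITHMS[alg]).hexdigest()
    return hmac.compare_digest(expected, digest)


if __name__ == "__main__":
    key = b"demo-key"  # placeholder key for illustration only
    legacy = tag(key, b"record", alg="hmac-sha256")
    fresh = tag(key, b"record")
    print(verify(key, b"record", legacy), verify(key, b"record", fresh))
```

Once all stored data has been re-protected under the new algorithm, removing the old identifier from `ACCEPTED_ALGS` retires it everywhere at once, which is the "quickly switch between encryption methods" property in practice.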

Build Resilience, Not Just Defense

The 2026 threat landscape requires shifting from prevention-focused security to resilience-focused security. Organizations must assume they will be breached and focus on minimizing damage, maintaining operations, and recovering quickly.

This includes implementing robust backup systems that are isolated from production networks, conducting regular recovery drills, establishing clear incident response protocols that account for AI-enhanced attacks, and building redundancy into critical systems.
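Recovery drills are only meaningful if the restored data is checked, not just restored. One simple approach, sketched below under the assumption that backups are plain file trees, is to record a SHA-256 manifest at backup time and compare a test restore against it. The function names are hypothetical, and real backup products typically provide their own integrity verification; this only shows the underlying idea.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_file(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large files do not need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> Dict[str, str]:
    """Record a hash for every file under root at backup time."""
    return {
        str(p.relative_to(root)): sha256_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_restore(manifest: Dict[str, str], restored_root: Path) -> List[str]:
    """Compare a test restore against the manifest; return any problems found."""
    problems = []
    for rel, expected in manifest.items():
        restored = restored_root / rel
        if not restored.is_file():
            problems.append(f"missing: {rel}")
        elif sha256_file(restored) != expected:
            problems.append(f"mismatch: {rel}")
    return problems
```

Storing the manifest alongside the isolated backup, rather than on the production network, keeps an attacker who tampers with production data from also rewriting the reference hashes.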

Foster Security Culture

Technology alone cannot solve the AI security challenge. Organizations must cultivate a security-aware culture where every employee understands their role in the defensive posture. This includes regular training on AI-enhanced threats, particularly deepfake and social engineering scenarios; clear escalation procedures for suspicious activities; encouraging healthy skepticism and verification; and leadership modeling security-conscious behavior.

Conclusion: Navigating the Dual-Use AI Reality

The AI cyber arms race represents a fundamental transformation in how organizations must approach security. The same technologies that enable unprecedented defensive capabilities simultaneously empower attackers with automation, intelligence, and scale that overwhelm traditional security measures.

The statistics paint a sobering picture: cyberattacks on energy utilities have tripled, 67.4% of phishing attacks now use AI, attackers can begin exploiting a vulnerability as little as 22 minutes after a proof-of-concept exploit is published, 4.8 million cybersecurity positions remain unfilled, and global cybercrime losses were projected to reach $10.5 trillion annually by 2025.

Yet the situation is not hopeless. Organizations that recognize the dual-use nature of AI and adapt their strategies accordingly can achieve effective defense in this new era. This requires embracing AI-powered defensive technologies while remaining cognizant of their limitations, treating AI agents as distinct security entities requiring specialized protection, addressing the skills gap through training and non-traditional hiring, implementing zero trust architectures that assume breach, preparing for quantum computing threats, building organizational resilience rather than focusing solely on prevention, and fostering security-aware cultures where every employee understands their role.

For CISOs and security professionals, the imperative is clear: the organizations that adapt to the AI cyber arms race now will be positioned to thrive in the years ahead. Those that cling to traditional security models will find themselves increasingly vulnerable to adversaries wielding the same AI technologies but without the organizational constraints and compliance requirements that govern defensive uses.

The threat landscape of 2026 will be defined by intelligent, adaptive, and autonomous attacks. The defensive posture must evolve to match, leveraging the same AI capabilities while recognizing that technology alone is insufficient. Human expertise, organizational culture, strategic planning, and continuous adaptation remain essential components of effective cybersecurity.

The AI cyber arms race is not a future concern but a present reality. The time for action is now, before the gap between attacker and defender capabilities widens further. Success requires not just investment in technology but fundamental transformation in how organizations think about, resource, and execute cybersecurity in an age where both sides wield the same powerful tools.

Sources

- AI is set to drive surging electricity demand from data centres. International Energy Agency. https://www.iea.org/news/ai-is-set-to-drive-surging-electricity-demand-from-data-centres-while-offering-the-potential-to-transform-how-the-energy-sector-works

- Cyberattacks per week per energy organisation, 2020-2024. International Energy Agency. https://www.iea.org/data-and-statistics/charts/cyberattacks-per-week-per-energy-organisation-2020-2024

- Why the energy transition means more cyberattacks. Spectra by MHI. https://spectra.mhi.com/why-the-energy-transition-means-more-cyberattacks

- Cybersecurity: is the power system lagging behind? International Energy Agency. https://www.iea.org/commentaries/cybersecurity-is-the-power-system-lagging-behind

- Cyber resilience. International Energy Agency. https://www.iea.org/reports/power-systems-in-transition/cyber-resilience

- Cyber Threats Against Energy Sector Surge as Global Tensions Mount. Resecurity. https://www.resecurity.com/blog/article/cyber-threats-against-energy-sector-surge-global-tensions-mount

- Top Utilities Cyberattacks of 2025 and Their Impact. Asimily. https://asimily.com/blog/top-utilities-cyberattacks-of-2025/

- Cybersecurity in the power sector. Eurelectric. https://www.eurelectric.org/in-detail/cybersecurity-in-the-power-sector/

- The State of Utility Cybersecurity in 2025. Forescout. https://www.forescout.com/blog/the-state-of-utility-cybersecurity-in-2025/

- The energy sector has no time to wait for the next cyberattack. Help Net Security. https://www.helpnetsecurity.com/2025/08/26/energy-sector-cyber-risks/

- Hackers Use AI to Supercharge Social Engineering Attacks. PYMNTS.com. https://www.pymnts.com/news/artificial-intelligence/2025/hackers-use-ai-supercharge-social-engineering-attacks/

- Social Engineering Statistics 2025: The Human Hack. DeepStrike. https://deepstrike.io/blog/social-engineering-statistics-2025

- 100+ Latest Social Engineering Statistics: Costs, Trends, AI. Sprinto. https://sprinto.com/blog/social-engineering-statistics/

- 70 Social Engineering Statistics for 2025. Spacelift. https://spacelift.io/blog/social-engineering-statistics

- The Rise of AI-Powered Phishing 2025. CybelAngel. https://cybelangel.com/blog/rise-ai-phishing/

- Phishing Trends Report (Updated for 2025). Hoxhunt. https://hoxhunt.com/guide/phishing-trends-report

- AI-Powered Phishing Outperforms Elite Cybercriminals in 2025. Hoxhunt. https://hoxhunt.com/blog/ai-powered-phishing-vs-humans

- How AI and deepfakes are redefining social engineering threats. Help Net Security. https://www.helpnetsecurity.com/2025/01/07/phishing-trends-2024/

- AI-Enhanced Social Engineering Will Reshape the Cyber Threat Landscape. Lawfare. https://www.lawfaremedia.org/article/ai-enhanced-social-engineering-will-reshape-the-cyber-threat-landscape

- AI-Powered Social Engineering Attacks. CrowdStrike. https://www.crowdstrike.com/en-us/cybersecurity-101/social-engineering/ai-social-engineering/

- Artificial Intelligence in Cybersecurity Market Report 2025. Globe Newswire. https://www.globenewswire.com/news-release/2025/10/23/3171810/0/en/Artificial-Intelligence-in-Cybersecurity-Market-Report-2025-Revenues-Grew-by-5-4-Billion-Over-2024-2025-Rising-Cyber-Threats-and-Digital-Transformation-Drive-Growth.html

- AI and Cybersecurity: Predictions for 2025. Darktrace. https://www.darktrace.com/blog/ai-and-cybersecurity-predictions-for-2025

- A summer of security: empowering cyber defenders with AI. Google. https://blog.google/technology/safety-security/cybersecurity-updates-summer-2025/

- 2025 Forecast: AI to supercharge attacks, quantum threats grow. SC Media. https://www.scworld.com/feature/cybersecurity-threats-continue-to-evolve-in-2025-driven-by-ai

- 2025 AI Cybersecurity Threat Trends. TrustNet. https://trustnetinc.com/resources/the-rise-of-ai-driven-cyber-threats-in-2025/

- How AI and APTs Are Transforming Cyber Warfare in 2025. Zentara. https://zentara.co/blog/ai-and-apts-cyber-warfare-2025/

- DARPA’s AI Cyber Challenge reveals winning models. CyberScoop. https://cyberscoop.com/darpa-ai-cyber-challenge-winners-def-con-2025/

- Artificial Intelligence in Cybersecurity: The Future of Threat Defense. Fortinet. https://www.fortinet.com/resources/cyberglossary/artificial-intelligence-in-cybersecurity

- AI Security Findings Outpace Cybersecurity Remediation in 2025. HackerOne. https://www.hackerone.com/blog/ai-security-cybersecurity-remediation-gap

- AI-Powered Cyber Threats in 2025. Medium. https://medium.com/@seripallychetan/ai-powered-cyber-threats-in-2025-the-rise-of-autonomous-attack-agents-and-the-collapse-of-ce80a5f05afa

- ISC2 Cybersecurity Workforce Study: Shortage of AI skilled workers. IBM. https://www.ibm.com/think/insights/isc2-cybersecurity-workforce-study-shortage-ai-skilled-workers

- The cybersecurity industry has an urgent talent shortage. World Economic Forum. https://www.weforum.org/stories/2024/04/cybersecurity-industry-talent-shortage-new-report/

- Cybersecurity Skills Gap Statistics for 2025. DeepStrike. https://deepstrike.io/blog/cybersecurity-skills-gap

- Results of the 2024 ISC2 Cybersecurity Workforce Study. ISC2. https://www.isc2.org/Insights/2024/10/ISC2-2024-Cybersecurity-Workforce-Study

- Cybersecurity Has a Talent Shortage. Here’s How to Close the Gap. BCG. https://www.bcg.com/publications/2024/cybersecurity-talent-shortage-close-the-gap

- 2025 Cybersecurity Skills Gap Global Research Report. Fortinet. https://www.fortinet.com/content/dam/fortinet/assets/reports/2025-cybersecurity-skills-gap-report.pdf

- AI, cybersecurity drive IT investments and lead skill shortages for 2025. Network World. https://www.networkworld.com/article/3606683/ai-cybersecurity-drive-it-investments-and-lead-skill-shortages-for-2025.html

- The state of cybersecurity and IT talent shortages. Help Net Security. https://www.helpnetsecurity.com/2024/12/31/cybersecurity-skills-gap-trends-2024/

- Will AI Take Over Cyber Security? Careers and Tech Reviewed. StationX. https://www.stationx.net/will-ai-replace-cyber-security-jobs/

- Skills Shortages Trump Headcount as Critical Cyber Challenge. Infosecurity Magazine. https://www.infosecurity-magazine.com/news/skills-shortages-headcount-2025/

- AI takes center stage as the major threat to cybersecurity in 2026. Experian. https://www.experianplc.com/newsroom/press-releases/2025/ai-takes-center-stage-as-the-major-threat-to-cybersecurity-in-20

- Cybersecurity Forecast 2026. Google Cloud. https://cloud.google.com/security/resources/cybersecurity-forecast

- 6 Cybersecurity Predictions for the AI Economy in 2026. Harvard Business Review. https://hbr.org/sponsored/2025/12/6-cybersecurity-predictions-for-the-ai-economy-in-2026

- The AI-fication of Cyberthreats: Trend Micro Security Predictions for 2026. Trend Micro. https://www.trendmicro.com/vinfo/us/security/research-and-analysis/predictions/the-ai-fication-of-cyberthreats-trend-micro-security-predictions-for-2026

- Preparing for Threats to Come: Cybersecurity Forecast 2026. Google Cloud Blog. https://cloud.google.com/blog/topics/threat-intelligence/cybersecurity-forecast-2026

- AI Takes Center Stage as the Major Threat to Cybersecurity in 2026. Business Wire. https://www.businesswire.com/news/home/20251202474758/en/AI-Takes-Center-Stage-as-the-Major-Threat-to-Cybersecurity-in-2026

- Five Cybersecurity Predictions for 2026. SecurityWeek. https://www.securityweek.com/five-cybersecurity-predictions-for-2026-identity-ai-and-the-collapse-of-perimeter-thinking/

- Cybersecurity trends: IBM’s predictions for 2026. IBM. https://www.ibm.com/think/news/cybersecurity-trends-predictions-2026

- The Year Ahead: AI Cybersecurity Trends to Watch in 2026. Darktrace. https://www.darktrace.com/blog/the-year-ahead-ai-cybersecurity-trends-to-watch-in-2026

- Five Cyber Predictions for 2026. SECURITY.COM. https://www.security.com/feature-stories/five-cyber-predictions-2026