The artificial intelligence revolution is accelerating at a breathtaking pace, but beneath the headlines about ChatGPT, autonomous vehicles, and AI-generated art lies a fascinating economic transformation that’s fundamentally reshaping the technology landscape. The cost to train cutting-edge AI models—once a barrier accessible only to tech giants with billion-dollar budgets—is plummeting at rates that dwarf even Moore’s Law. This dramatic cost reduction is democratizing AI development, enabling startups and mid-sized companies to compete in ways previously unimaginable, and setting the stage for an explosion of AI applications across every sector of the economy.

Understanding the AI Training Cost Explosion—And Its Reversal

To appreciate why current cost reductions matter so profoundly, we must first understand the economic pressures that defined AI development until recently. Training state-of-the-art AI models has become one of the most resource-intensive computational endeavors in human history. According to Stanford’s 2025 AI Index Report, training GPT-4 required approximately seventy-eight million dollars in compute resources alone, while Google’s Gemini Ultra reached an estimated one hundred ninety-one million dollars. These staggering figures represent only computational costs, excluding substantial expenses for research and development personnel, infrastructure maintenance, data acquisition and cleaning, and operational overhead.

The fundamental economics of AI training revolve around several interconnected factors. At the hardware level, high-performance GPU instances currently range from two dollars to fifteen dollars per hour depending on the specific accelerator type, memory configuration, and cloud provider. NVIDIA H100 SXM instances, among the most powerful training hardware available, cost approximately $2.40 per hour on certain cloud platforms. However, training frontier models requires not hundreds of GPU hours but hundreds of thousands or even millions.

Consider the scale: a model using one hundred thousand H100 GPU hours at $2.40 per hour would incur two hundred forty thousand dollars in direct compute costs. Scale this to the 2.79 million GPU hours required for DeepSeek-V3, and costs quickly reach into the millions even before considering additional overhead from distributed training, data preprocessing, and experimentation cycles. The exponential growth in AI training expenses reflects converging factors including larger model architectures expanding from millions to trillions of parameters, expanded training datasets growing from gigabytes to petabytes, increased computational requirements measured in floating-point operations, and the scaling challenge where performance improvements require disproportionately more compute.
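The arithmetic above is easy to reproduce. The following is a minimal sketch, not a pricing tool: the function name is hypothetical, and applying the $2.40 H100 rate to DeepSeek-V3's GPU hours is an assumption made purely for comparison, since that model was trained on different hardware at a different rate.

```python
def compute_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Direct compute cost: GPU hours multiplied by the hourly rental rate."""
    return gpu_hours * usd_per_gpu_hour


# The illustrative run from the text: 100,000 H100 hours at $2.40 per hour.
example_cost = compute_cost_usd(100_000, 2.40)

# DeepSeek-V3's 2.79 million GPU hours, priced at the same $2.40 rate.
# Assumption for comparison only; it ignores overhead and experimentation.
v3_at_h100_rate = compute_cost_usd(2_790_000, 2.40)

print(f"Illustrative run: ${example_cost:,.0f}")
print(f"2.79M GPU hours at $2.40: ${v3_at_h100_rate:,.0f}")
```

Even this simple multiplication shows why hourly price and total GPU hours are the two levers that matter most for direct compute cost.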

Yet amid this apparent cost escalation, a remarkable counter-trend has emerged that’s transforming AI economics more profoundly than most observers realize.

The Great Cost Reversal: Training Costs Improving 50X Faster Than Moore’s Law

The most striking development in AI economics is the rate at which training costs are declining. Research from ARK Investment Management reveals that AI training costs are dropping roughly tenfold every year—a rate approximately fifty times faster than Moore’s Law, which predicted a doubling of transistor density every two years. In 2017, training an image recognition network like ResNet-50 on a public cloud cost approximately one thousand dollars. By 2019, that cost had plummeted to roughly ten dollars. Projections suggested costs would reach approximately one dollar by 2020, and the trend has continued its dramatic downward trajectory through 2025.
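One way to see where the "fifty times" figure can come from is to compare total improvement over the same two-year window. This is a sketch of one plausible reading of the numbers quoted above, not ARK's published methodology; the function name is hypothetical.

```python
def annual_improvement(start_cost: float, end_cost: float, years: float) -> float:
    """Average yearly improvement factor implied by a start and end cost."""
    return (start_cost / end_cost) ** (1.0 / years)


# ResNet-50 on a public cloud: about $1,000 in 2017, about $10 in 2019.
resnet_total = 1000 / 10                             # 100x over two years
resnet_annual = annual_improvement(1000, 10, 2)      # roughly 10x per year

# Moore's Law as stated in the text: a doubling every two years.
moore_total = 2.0                                    # 2x over two years
moore_annual = annual_improvement(2.0, 1.0, 2)       # roughly 1.41x per year

# Ratio of total improvement over the same two-year window.
speedup_vs_moore = resnet_total / moore_total

print(f"Training cost: {resnet_annual:.1f}x cheaper per year")
print(f"Moore's Law:   {moore_annual:.2f}x per year")
print(f"Two-year improvement ratio: {speedup_vs_moore:.0f}x")
```

Under this reading, a hundredfold cost drop against a twofold density gain over the same period yields the fiftyfold comparison.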

This isn’t theoretical improvement; it’s a measurable transformation happening across multiple AI benchmarks. Data from MLPerf, the industry-standard AI benchmark suite, demonstrates that training costs have declined while training times have shortened across task categories including image recognition, object detection, natural language processing, and recommendation systems. Analysis from the American Enterprise Institute tracking AI training costs shows a steady decline on a logarithmic scale, meaning a roughly constant percentage drop each year, even as model capabilities have expanded exponentially.

Recent market dynamics reveal GPU hourly pricing declining by approximately fifteen percent, making experimentation more accessible to mid-market companies. Perhaps more remarkably, the 2025 price per FP32 FLOP (floating-point operation) is about twenty-six percent of the 2019 price, corresponding to roughly a seventy-four percent decrease in computational cost over six years.

The question becomes: what’s driving this extraordinary cost reduction when model sizes and computational requirements continue growing? The answer lies in a convergence of technological breakthroughs across hardware, algorithms, and system design that are fundamentally rewriting AI economics.

Hardware Revolution: The Chip Wars Driving Cost Reduction

At the foundation of AI cost improvements lies a fierce battle for supremacy in specialized AI hardware. This competition is driving innovation at unprecedented speeds while simultaneously pushing costs downward through improved performance per dollar.

NVIDIA’s Dominance and the Competitive Response

NVIDIA has maintained overwhelming dominance in AI training hardware, with its H100 and subsequent architectures powering the majority of frontier AI development. The company is expected to report forty-nine billion dollars in AI-related revenue in 2025, representing a thirty-nine percent increase from the previous year. NVIDIA’s 2025 Blackwell architecture offers transformer acceleration twice as fast as previous generations, along with improved sparsity handling, setting new benchmarks for training speed and efficiency.

However, NVIDIA’s pricing power—with H100 chips costing tens of thousands of dollars each and GPU prices actually tripling over recent generations—has created both opportunity and urgency for competitors. The incredibly high cost of NVIDIA chips means cloud providers make lower profits renting out those chips than they could with their own custom silicon, creating powerful incentives for vertical integration.

AMD’s Challenge and Custom Silicon Revolution

AMD has emerged as NVIDIA’s most serious competitor in AI training hardware. The company is projected to grow its AI chip division to $5.6 billion in 2025, effectively doubling its data center footprint. AMD’s MI300X GPU features market-leading memory capacity with 192GB of HBM3 memory compared to NVIDIA H100’s 80GB, providing substantial advantages for memory-intensive workloads like training large language models.

The MI400 series, incorporating chiplet-based modularity allowing dynamic scaling across tasks, represents AMD’s 2025 response to NVIDIA’s latest offerings. According to recent benchmarks, AMD’s MI300 series achieves performance on par with or exceeding NVIDIA’s H100 for inferencing on 70-billion-parameter language models, though NVIDIA maintains advantages in certain training scenarios.

Beyond AMD, a revolution in custom silicon is fundamentally reshaping AI chip economics. Tech giants are designing specialized AI accelerators tailored to their specific workloads, capturing efficiency gains that generic GPUs cannot match. Google’s TPU v5p, announced in late 2023, shows a thirty percent improvement in throughput and twenty-five percent lower energy consumption compared to its previous generation. Its multi-slice matrix fusion improves large-model training speed by thirty-five percent.

Amazon’s Trainium2 chips power massive data center projects like Project Rainier, with hundreds of thousands of chips deployed for AI training. Meta’s MTIA (Meta Training and Inference Accelerator) family, based on TSMC 5nm technology, claims three times the performance of MTIA v1. Microsoft’s Maia 100, built on TSMC’s N5 process, targets high throughput for Azure workloads through hardware-software co-optimization.

According to JPMorgan analysis, custom chips designed by companies like Google, Amazon, Meta, and OpenAI will account for forty-five percent of the AI chip market by 2028, up from thirty-seven percent in 2024. This shift represents a fundamental reorganization of AI economics, as companies escape vendor lock-in and optimize specifically for their use cases.

Specialized Startups and Emerging Architectures

Beyond established tech giants, a wave of specialized AI chip startups is pushing innovation boundaries with novel architectures. Cerebras Systems builds wafer-scale processors that dwarf traditional chips in size and capability, targeting specific training workloads. Graphcore’s IPU-3 achieves fifty percent higher FLOPS-per-watt efficiency, leading in energy-scaled compute. Groq focuses on deterministic, low-latency inference. SambaNova offers full-stack AI platforms combining hardware and software.

The overall result of this intense competition is a dramatic improvement in price-performance. Chips optimized for Transformer architectures—the foundation of modern language models—are expected to comprise twenty percent of all AI compute hardware sold in 2025. In data center inference workloads, specialized ASICs (Application-Specific Integrated Circuits) are surpassing GPUs in efficiency, grabbing thirty-seven percent of total deployment share. Between 2020 and 2025, AI chip energy efficiency improved by an astonishing forty percent year over year, transforming the economics of AI computing by cutting operational costs and enabling more powerful applications within the same power constraints.

Algorithmic Breakthroughs: Doing More With Less

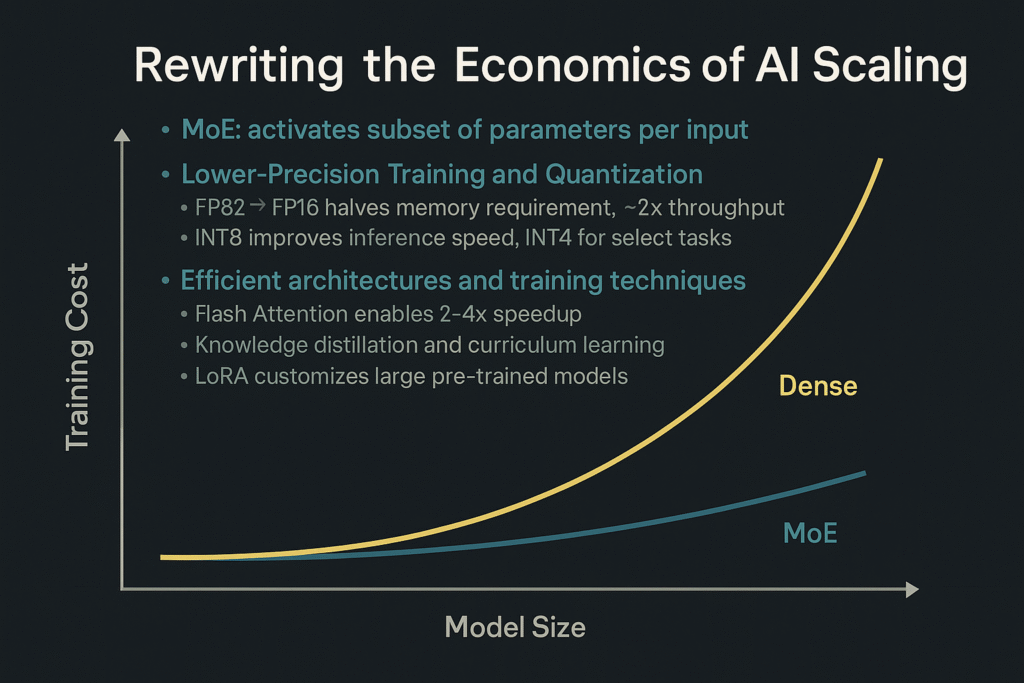

While hardware improvements capture headlines, algorithmic innovations are arguably even more transformative in reducing AI training costs. These breakthroughs enable models to achieve superior performance with dramatically fewer computational resources, fundamentally changing the cost equation.

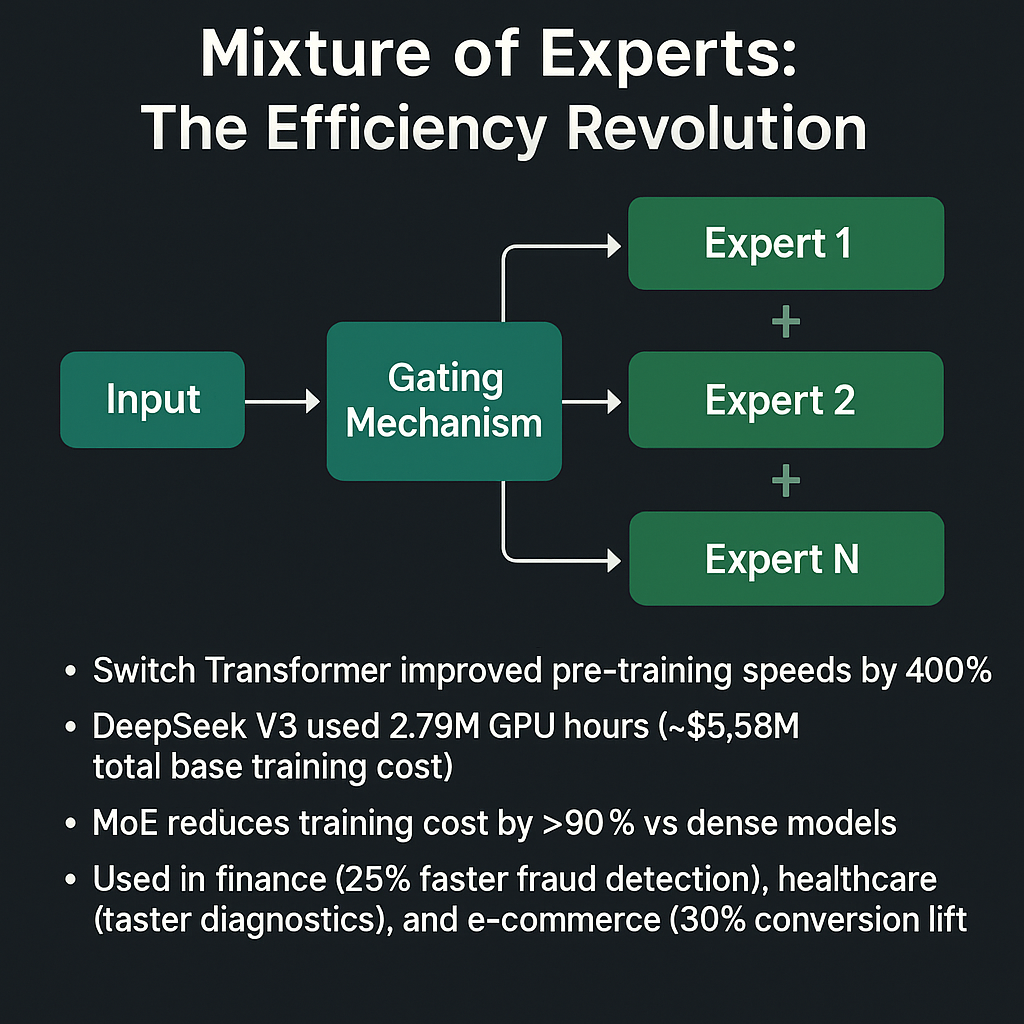

Mixture of Experts: The Efficiency Revolution

Perhaps no single algorithmic innovation has impacted AI economics more profoundly than Mixture of Experts (MoE) architectures. Traditional neural networks operate as dense models, activating all parameters for every input, making them computationally expensive at scale. MoE models revolutionize this approach by partitioning the network into multiple specialized “experts”—smaller sub-networks that each handle specific aspects of the problem space. A gating mechanism dynamically routes inputs to the most relevant experts for each task, meaning only a subset of the model’s total parameters activates for any given input.

The efficiency gains are remarkable. Google’s Switch Transformer, implementing MoE with top-1 routing where each input activates exactly one expert from a pool of 128, improved pre-training speeds by four hundred percent even when scaling to trillion-parameter models. This means organizations can train models with massive total capacity while keeping active computational requirements comparable to much smaller dense models.
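To make top-1 routing concrete, here is a deliberately tiny sketch in plain Python. It is not Google's implementation: the class name, the dimensions, and the use of a single linear map per expert are simplifying assumptions, and it omits the load-balancing loss and capacity limits that production MoE layers rely on.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class Top1MoE:
    """Hypothetical toy layer: a router picks exactly one expert per token."""

    def __init__(self, num_experts, dim, seed=0):
        rng = random.Random(seed)

        def rand_matrix(rows, cols):
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.router = rand_matrix(num_experts, dim)   # one score row per expert
        self.experts = [rand_matrix(dim, dim) for _ in range(num_experts)]

    def forward(self, token):
        gate_probs = softmax(matvec(self.router, token))
        chosen = max(range(len(gate_probs)), key=gate_probs.__getitem__)
        # Only the chosen expert's weights are used for this token.
        expert_out = matvec(self.experts[chosen], token)
        # Scale by the gate probability so the router receives a training signal.
        return [gate_probs[chosen] * y for y in expert_out], chosen


layer = Top1MoE(num_experts=8, dim=4)
output, chosen = layer.forward([0.5, -1.0, 0.25, 2.0])
active_fraction = 1 / len(layer.experts)
print(f"Routed to expert {chosen}; {active_fraction:.1%} of expert weights used")
```

The point of the sketch is the cost structure: adding experts grows total capacity, while the work done per token stays at one expert's worth of computation plus a small routing step.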

DeepSeek’s V3 model exemplifies MoE economics in practice. The model uses 2.79 million GPU hours at an estimated cost of $5.58 million for the base training. While initial reports claimed even lower costs by focusing only on reinforcement learning fine-tuning (approximately three hundred thousand dollars), the complete picture—including base model training—still represents extraordinary cost efficiency compared to dense models of equivalent capability. DeepSeek’s architecture demonstrates that sophisticated MoE implementations can slash training costs by ninety percent or more compared to traditional dense approaches while maintaining or improving performance.

The technical advantages of MoE extend beyond raw cost reduction. These architectures excel at handling heterogeneous data where patterns and dependencies are complex and non-linear—precisely the characteristics of real-world datasets in natural language processing, computer vision, and multimodal learning. Financial institutions use MoE for fraud detection, improving detection speed by twenty-five percent while reducing energy consumption. Healthcare organizations deploy MoE for diagnostic models, achieving quicker and more accurate patient assessments. E-commerce platforms leverage MoE for personalized marketing, resulting in thirty percent boosts in conversion rates.

Lower-Precision Training and Quantization

Another algorithmic innovation substantially reducing training costs involves numerical precision optimization. Traditional neural network training used 32-bit floating-point precision (FP32) for calculations, providing high accuracy but requiring significant memory and computational resources. Modern approaches leverage lower-precision formats including FP16 (16-bit), BF16 (bfloat16), INT8 (8-bit integers), and even INT4 for certain operations.

The computational savings are substantial. Reducing from FP32 to FP16 approximately halves memory requirements and can double throughput on hardware with specialized low-precision cores. Moving to INT8 for inference provides further speedups while maintaining acceptable accuracy for many applications. Hardware manufacturers increasingly optimize their chips for mixed-precision training, where different parts of the computation use different precision levels based on numerical sensitivity.
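The memory side of this is simple arithmetic. The sketch below estimates storage for model weights only, using a hypothetical seven-billion-parameter model as the example; gradients, optimizer state, and activations are deliberately left out, so real training footprints are considerably larger.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {
    "FP32": 4.0,
    "FP16": 2.0,
    "BF16": 2.0,
    "INT8": 1.0,
    "INT4": 0.5,
}


def weight_memory_gb(num_params: float, fmt: str) -> float:
    """Approximate memory for weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


# Hypothetical model size, chosen only for illustration.
NUM_PARAMS = 7e9

for fmt in BYTES_PER_PARAM:
    print(f"{fmt}: {weight_memory_gb(NUM_PARAMS, fmt):.1f} GB")
```

Halving the bytes per parameter halves the weight footprint, which is why the same accelerator can hold a model twice as large in FP16 or BF16 as in FP32.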

NVIDIA’s Blackwell architecture, AMD’s MI400 series, and Google’s latest TPUs all feature enhanced support for multiple precision formats, enabling developers to optimize the cost-performance tradeoff for their specific use cases. These capabilities mean organizations can train larger models within the same hardware budget or train equivalent models at substantially lower cost.

Efficient Architectures and Training Techniques

Beyond MoE and precision optimization, numerous architectural and training innovations contribute to cost reduction. Efficient attention mechanisms reduce the quadratic computational complexity of standard transformer attention, enabling processing of much longer sequences at equivalent cost. Flash Attention and other optimized implementations achieve two to four times speedup in training and inference by better utilizing GPU memory hierarchy.

Knowledge distillation techniques allow smaller, more efficient “student” models to learn from larger “teacher” models, capturing much of the capability at a fraction of the computational cost. Organizations can deploy these distilled models for inference, dramatically reducing operational expenses while maintaining most of the performance benefits.
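A common way to express this teacher-student relationship is a loss that pulls the student's output distribution toward the teacher's temperature-softened distribution. The sketch below is one standard formulation under stated assumptions: the function names and the temperature of 2.0 are illustrative, and real training usually mixes this term with an ordinary loss on ground-truth labels.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature; higher values flatten it."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from teacher to student on softened distributions.

    The result is multiplied by temperature squared, a conventional scaling
    that keeps gradient magnitudes comparable across temperatures.
    """
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(teacher, student) if p > 0)
    return temperature ** 2 * kl


teacher_logits = [4.0, 1.0, 0.5]
matching_loss = distillation_loss([4.0, 1.0, 0.5], teacher_logits)
mismatched_loss = distillation_loss([0.5, 1.0, 4.0], teacher_logits)
print(f"Student agrees with teacher:    {matching_loss:.4f}")
print(f"Student disagrees with teacher: {mismatched_loss:.4f}")
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is what lets a small model absorb the larger model's behavior.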

Curriculum learning strategies improve training efficiency by presenting examples in order from simple to complex, helping models learn more effectively with less data and fewer training steps. Parameter-efficient fine-tuning methods like LoRA (Low-Rank Adaptation) enable customization of large pre-trained models for specific tasks with minimal additional computation, opening possibilities for specialization without massive retraining costs.
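The savings from LoRA follow from a parameter count. Instead of updating a full weight matrix, LoRA trains two thin matrices whose product has the same shape. The sketch below uses a hypothetical 4096 by 4096 layer and rank 8 purely as an illustration; actual layer sizes and ranks vary by model and task.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a low-rank update B @ A.

    B has shape (d_out, rank) and A has shape (rank, d_in).
    """
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, chosen only for illustration.
D_OUT, D_IN, RANK = 4096, 4096, 8

full = full_finetune_params(D_OUT, D_IN)
low_rank = lora_params(D_OUT, D_IN, RANK)
print(f"Full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {RANK}):  {low_rank:,} trainable parameters")
print(f"Fraction trained: {low_rank / full:.4%}")
```

Because the trainable count grows with the sum of the dimensions rather than their product, the adapter stays small even for very wide layers.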

Software Stack Maturation: From Research Code to Production Systems

The infrastructure supporting AI development has matured dramatically, contributing significantly to cost reductions through improved efficiency and developer productivity. Modern AI frameworks and tools enable organizations to leverage best practices and optimizations that would have required expert teams to implement just years ago.

Framework Evolution and Optimization

Deep learning frameworks have evolved from research prototypes into production-grade systems optimized for efficiency. PyTorch and TensorFlow, the dominant frameworks, have incorporated numerous optimizations including automatic mixed precision, efficient memory management, distributed training support, and hardware-specific optimizations. Holding hardware constant, newer versions of these frameworks combined with novel training methods deliver roughly an eightfold performance gain over earlier versions.

The ecosystem around these frameworks has expanded to provide ready-made solutions for common challenges. The Hugging Face Transformers library offers pre-trained models and training pipelines that dramatically reduce the time and expertise required to develop state-of-the-art models. DeepSpeed from Microsoft provides system optimizations enabling trillion-parameter model training on limited hardware. FairScale from Meta offers tools for training at scale with a reduced memory footprint.

Cloud Infrastructure and Managed Services

Cloud providers have developed sophisticated managed AI services that abstract away infrastructure complexity and enable organizations to focus on model development rather than system administration. These services provide auto-scaling, managed distributed training, hyperparameter optimization, and spot instance integration to reduce costs by leveraging unused capacity.

The broader GPU cloud market shows pricing ranging from thirty-two cents to sixteen dollars per GPU per hour across different hardware tiers and providers. Recent competitive pressure and supply increases have driven GPU hourly pricing down approximately fifteen percent, making experimentation more accessible. However, the more significant cost benefit comes from improved utilization efficiency. Organizations that previously achieved thirty to forty percent GPU utilization—wasting sixty to seventy percent of purchased compute—can now reach seventy percent or higher through better scheduling and resource management.
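Utilization matters because idle time is still billed. A rough way to capture this is the price paid per hour of useful work, sketched below. The function name is hypothetical, and the $2.40 hourly rate is borrowed from the H100 example earlier in the article as an assumption.

```python
def cost_per_useful_gpu_hour(hourly_price: float, utilization: float) -> float:
    """Effective price of one hour of productive GPU work.

    `utilization` is the fraction of paid time spent on useful computation.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the interval (0, 1]")
    return hourly_price / utilization


HOURLY_PRICE = 2.40  # assumed rate, taken from the H100 example in the text

before = cost_per_useful_gpu_hour(HOURLY_PRICE, 0.35)  # midpoint of 30 to 40 percent
after = cost_per_useful_gpu_hour(HOURLY_PRICE, 0.70)

print(f"At 35% utilization: ${before:.2f} per useful GPU hour")
print(f"At 70% utilization: ${after:.2f} per useful GPU hour")
print(f"Effective saving:   {1 - after / before:.0%}")
```

Doubling utilization halves the effective price, a much larger effect than the roughly fifteen percent drop in list prices.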

Dynamic resource allocation and workload orchestration systems enable organizations to maximize infrastructure utilization by automatically distributing work across available resources, pre-empting lower-priority jobs when urgent workloads arrive, and leveraging heterogeneous hardware efficiently.

System-Level Efficiencies: Beyond Individual Model Training

While much attention focuses on individual model training costs, system-level efficiencies increasingly dominate the economic equation for organizations deploying AI at scale.

Inference Cost Optimization

Training costs, though substantial, represent one-time expenses. Inference costs—serving predictions to users—persist indefinitely and often dominate total cost of ownership for deployed AI systems. The cost to run AI inference has dropped even more precipitously than training costs, in some cases collapsing to near zero for many use cases.

MoE architectures that activate only subsets of parameters provide enormous inference efficiency gains. A model with six hundred billion total parameters might activate only thirteen billion per request, reducing inference cost by approximately ninety-seven percent compared to activating all parameters. Hardware vendors increasingly optimize for inference workloads specifically, with specialized inference accelerators delivering superior price-performance compared to training-focused GPUs.
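The ninety-seven percent figure can be checked directly. The sketch below assumes that per-request compute scales with the number of active parameters, which is a simplification: it ignores routing overhead and the fact that all experts must still be held in memory.

```python
def active_fraction(active_params: float, total_params: float) -> float:
    """Share of the model's parameters used for a single request."""
    return active_params / total_params


def compute_reduction(active_params: float, total_params: float) -> float:
    """Compute saved versus a dense model of the same total size.

    Assumes compute is proportional to active parameters (a simplification).
    """
    return 1.0 - active_fraction(active_params, total_params)


# The illustrative model from the text: 600B total, 13B active per request.
fraction = active_fraction(13e9, 600e9)
reduction = compute_reduction(13e9, 600e9)

print(f"Active share of parameters: {fraction:.1%}")
print(f"Estimated compute reduction: {reduction:.1%}")
```

The estimate lands just under ninety-eight percent, in line with the approximate figure quoted above.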

Chinese AI startup DeepSeek introduced discounted off-peak pricing for AI models, reducing costs by up to seventy-five percent during non-peak hours. Because the off-peak window overlaps with daytime in Europe and the United States, developers in those regions benefit most, demonstrating that operational innovations can deliver cost savings rivaling technical improvements.

Transfer Learning and Foundation Models

The rise of foundation models—large, general-purpose models pre-trained on diverse data—fundamentally alters AI economics. Rather than training specialized models from scratch for each task, organizations can fine-tune pre-trained foundation models with relatively modest computational budgets. A task that might require millions of dollars to train from scratch can often be addressed through fine-tuning costing thousands or even hundreds of dollars.

This economic shift democratizes AI development. Startups and research teams without massive computing budgets can leverage foundation models from OpenAI, Anthropic, Meta, or Google and customize them for specialized applications. Open-source foundation models like Meta’s Llama series particularly enable this dynamic, as organizations avoid API costs and maintain full control over deployment.

Collaborative Training and Resource Sharing

Emerging approaches to collaborative AI development further reduce costs by distributing them across multiple parties. Federated learning enables training on decentralized data without centralizing information, reducing data transfer costs and privacy risks while enabling multiple organizations to jointly improve models. Distributed training protocols allow organizations to pool computational resources, achieving economies of scale impossible individually. Compute cooperatives and shared infrastructure initiatives enable smaller organizations to access high-end training resources through collective investment.

The DeepSeek Phenomenon: Case Study in Cost Efficiency

DeepSeek’s V3 and R1 releases, published in late 2024 and early 2025 respectively, exemplify the transformation in AI economics and sparked intense debate about training cost realities. Initial reports claimed DeepSeek trained its R1 model for approximately three hundred thousand dollars, a figure that seemed to represent revolutionary cost efficiency compared to the tens or hundreds of millions reportedly spent by Western AI labs.

However, deeper analysis reveals a more nuanced picture that nonetheless demonstrates substantial progress in cost reduction. The three-hundred-thousand-dollar figure referenced only the reinforcement learning fine-tuning phase applied to DeepSeek’s existing V3 base model, not the complete end-to-end training process. According to DeepSeek’s research disclosures, training the V3 base model required 2,048 H800 GPUs running for approximately two months, totaling 2.79 million GPU hours at an estimated cost of $5.58 million.

Adding the reinforcement learning phase brings total costs to approximately $5.87 million, still remarkably efficient compared to reported training costs exceeding $100 million for comparable Western models. DeepSeek’s efficiency stems from three sources: a sophisticated MoE architecture in which 671 billion total parameters provide the capacity of a much larger model while only thirty-seven billion parameters activate per token; efficient use of NVIDIA’s H800 chips (H100 variants built for the Chinese market to comply with US export controls); and algorithmic innovations in reinforcement learning from scratch using chain-of-thought reasoning approaches.
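The reported DeepSeek figures can be cross-checked against each other with a few lines of arithmetic. This is a consistency check on the numbers quoted in this section, not an independent cost estimate; the variable names are illustrative, and the reinforcement learning cost is entered as the rounded three hundred thousand dollars.

```python
GPU_COUNT = 2048                 # H800 GPUs used for the V3 base model
GPU_HOURS = 2_790_000            # reported total GPU hours
BASE_COST_USD = 5_580_000        # reported base training cost
RL_COST_USD = 300_000            # rounded reinforcement learning cost

# Wall-clock duration implied by spreading the GPU hours across all GPUs.
hours_per_gpu = GPU_HOURS / GPU_COUNT
days_of_training = hours_per_gpu / 24

# Hourly rate implied by the reported cost and GPU hours.
implied_rate = BASE_COST_USD / GPU_HOURS

# Base training plus the reinforcement learning phase.
total_cost = BASE_COST_USD + RL_COST_USD

print(f"Implied duration: {days_of_training:.0f} days")
print(f"Implied rate:     ${implied_rate:.2f} per GPU hour")
print(f"Combined cost:    ${total_cost / 1e6:.2f} million")
```

The implied duration is a little under two months and the implied rate is two dollars per GPU hour, so the figures hang together. The combined total comes out marginally above the quoted $5.87 million, a gap that is consistent with the reinforcement learning cost having been rounded up.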

The DeepSeek example demonstrates that even accounting for complete training costs, modern approaches achieve performance comparable to models costing twenty to forty times more to train. This represents not accounting tricks but genuine efficiency improvements from architectural and algorithmic innovation.

The Democratization Effect: Who Benefits From Falling Costs

The plummeting costs of AI training are fundamentally reshaping who can participate in AI development and deployment, creating ripple effects across the technology ecosystem and broader economy.

Startup and SME Access

Perhaps the most immediate beneficiaries are startups and small-to-medium enterprises that previously lacked resources to develop custom AI capabilities. Training costs that required tens of millions of dollars and excluded all but the best-funded companies now fall within reach of seed-stage startups and established SMEs with modest technology budgets.

This accessibility explosion enables startups to compete on AI capability rather than infrastructure spending, develop vertical-specific models tailored to niche markets, and rapidly iterate and experiment without prohibitive financial risks. Following Jevons’ Paradox, this cost reduction will not dampen demand—it will explode it. We should expect AI everywhere, from kitchen appliances to transit systems, as economic barriers evaporate.

Academic Research and Open Science

Academic institutions and research organizations, typically operating with far smaller budgets than tech companies, benefit enormously from training cost reductions. Universities can conduct cutting-edge AI research without requiring corporate-scale funding, graduate students can pursue ambitious thesis projects involving substantial model training, and open science initiatives can develop and share foundation models for public benefit.

The rise of accessible, high-quality open-source models like Meta’s Llama series, Mistral’s various releases, and community-driven projects like BLOOM demonstrates this democratization in action. Researchers worldwide can build upon these foundations, accelerating scientific progress through collaborative improvement rather than redundant re-implementation.

Geographic Distribution of AI Development

Training cost reductions particularly impact AI development outside traditional tech hubs in Silicon Valley, Seattle, and Beijing. Organizations in regions with less developed AI ecosystems can compete more effectively when infrastructure advantages matter less, nations with limited computing resources can develop sovereign AI capabilities, and emerging markets can leapfrog to AI adoption without building extensive traditional IT infrastructure.

Governments increasingly recognize AI as strategic technology and invest in domestic compute capacity. Canada’s $2.4 billion AI investment program exemplifies national strategies to reduce dependency on foreign cloud providers while supporting domestic AI development through sovereign compute infrastructure. Such programs become more viable as the required scale of investment decreases with improving cost efficiency.

Environmental Considerations: The Sustainability Dimension

AI training’s environmental impact has attracted increasing scrutiny as model sizes and training runs have grown. The energy consumption of frontier AI development raised concerns about sustainability, with estimates suggesting GPT-4’s training consumed as much electricity as 180,000 US homes in a month. However, the narrative around AI’s environmental footprint requires nuance as cost efficiency improvements often correlate with energy efficiency.

Energy Efficiency Improvements

AI chip energy efficiency has improved by approximately forty percent year-over-year between 2020 and 2025, a remarkable achievement that transforms the environmental equation. Modern GPUs and specialized AI accelerators deliver substantially more computation per watt than previous generations, MoE architectures that activate only necessary parameters reduce active energy consumption proportionally, and advanced cooling and power management systems in modern data centers minimize overhead energy use.

Importantly for both economics and environment, electricity represents only two to six percent of total training costs for frontier models, far less than commonly assumed. The dominant expenses remain hardware amortization at forty to fifty percent and personnel costs at twenty to thirty percent of total budgets. This means that even substantial energy price increases would have modest impact on training economics, while efficiency improvements that reduce hardware or personnel requirements deliver far greater cost benefits.
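A quick sensitivity check illustrates the point. The sketch below uses the budget shares quoted above; the doubling of electricity prices and the twenty percent hardware saving are hypothetical scenarios chosen for illustration, and the function name is an assumption.

```python
def total_cost_change(budget_share: float, price_change: float) -> float:
    """Relative change in total cost when one budget component changes price.

    `budget_share` is that component's fraction of the total budget and
    `price_change` is its relative price change (1.0 means it doubles).
    """
    return budget_share * price_change


# Hypothetical scenario 1: electricity prices double (+100%).
energy_low = total_cost_change(0.02, 1.0)
energy_high = total_cost_change(0.06, 1.0)

# Hypothetical scenario 2: hardware becomes 20% cheaper.
hardware_low = total_cost_change(0.40, -0.20)
hardware_high = total_cost_change(0.50, -0.20)

print(f"Electricity doubles: total cost up {energy_low:.0%} to {energy_high:.0%}")
print(f"Hardware 20% cheaper: total cost down {-hardware_low:.0%} to {-hardware_high:.0%}")
```

Even a doubling of electricity prices moves the total by only a few percent, while a modest hardware saving moves it by more, which is why efficiency gains in hardware dominate the economics.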

Sustainable AI Development Practices

The AI industry increasingly adopts sustainable development practices driven by both environmental consciousness and economic incentive. Organizations schedule intensive training runs during periods of renewable energy availability, utilize waste heat from data centers for district heating or industrial processes, optimize model architectures for efficiency rather than pure capability maximization, and implement lifecycle assessments considering environmental costs alongside traditional metrics.

These practices align environmental and economic objectives—the same architectural innovations that reduce training costs typically also reduce energy consumption, creating positive reinforcement for sustainable development.

Future Trajectories: Where AI Economics Are Headed

Understanding current cost trends provides insight into probable future developments that will shape AI’s role in society and economy.

Continued Cost Decline vs. Capability Scaling

Two competing forces will determine future cost trajectories. On one hand, continued improvements in hardware efficiency, algorithmic innovation, and system optimization suggest further substantial cost reductions for equivalent capabilities. Analysts project that costs for training equivalent to today’s frontier models could fall another order of magnitude by 2030.

On the other hand, competitive pressure to build more capable models drives continued scaling. If organizations channel cost savings into larger models rather than maintaining capability levels at lower costs, total training expenses may continue rising even as per-unit costs decline. The exponential growth in training compute for frontier models over the past decade—doubling approximately every six months—suggests this scaling pressure remains intense.

The likely outcome involves bifurcation. Frontier development by a handful of organizations will continue pushing capability boundaries with massive training runs approaching or exceeding one billion dollars by 2027. Simultaneously, the vast majority of AI applications will leverage increasingly efficient, specialized models trained at costs of thousands to millions of dollars—a democratization of capability that was economically impossible just years ago.

Inference Economics and Real-Time AI

As training costs decline, inference costs increasingly dominate total cost of ownership for deployed AI systems. The next phase of economic optimization will focus on serving predictions efficiently at scale. Specialized inference accelerators, quantization techniques reducing model precision for deployment, caching and compute reuse strategies, and edge deployment moving computation closer to users will all contribute to dramatic inference cost reductions.

The cost of running trained neural networks in production has already collapsed to near zero for many use cases, enabling AI integration into products and services where even modest per-query costs would prohibit deployment. This trend will accelerate, making AI capabilities ubiquitous across digital and increasingly physical products.

Multimodal and Embodied AI

Future cost reductions will particularly benefit multimodal AI systems that integrate text, images, audio, and video understanding. These systems typically require more diverse and expensive training data plus greater computational resources compared to single-modality models. As costs decline, developing sophisticated multimodal capabilities becomes economically viable for a broader range of applications.

Similarly, embodied AI—systems operating in physical environments through robots or smart devices—will benefit from cost reductions enabling continuous learning and adaptation in deployed systems. Rather than training once and deploying static models, future systems may refine capabilities based on real-world experience, approaching true learning agents.

Strategic Implications for Organizations

The transformation in AI training economics creates both opportunities and imperatives for organizations across sectors.

Build vs. Buy Decisions

Falling training costs shift optimal strategies for AI capability development. Previously, most organizations defaulted to consuming AI through APIs and cloud services, lacking resources for custom model development. With training costs declining, more organizations should evaluate building custom models tailored to their specific needs, data, and use cases. The calculus changes when training a specialized model costs tens of thousands rather than tens of millions of dollars.

However, “build” doesn’t necessarily mean training from scratch. The optimal approach often involves fine-tuning existing foundation models, combining pre-trained capabilities with organization-specific data and requirements. This hybrid approach captures the benefits of both general and specialized knowledge while minimizing training costs.
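The build-vs-buy calculus can be sketched as a simple break-even calculation. This is a minimal illustration, not a full financial model: the function name and all dollar figures are hypothetical, and it assumes constant monthly costs with no discounting.

```python
import math
from typing import Optional


def breakeven_months(build_upfront: float,
                     build_monthly: float,
                     buy_monthly: float) -> Optional[int]:
    """Months until cumulative 'build' cost is no higher than cumulative 'buy' cost.

    build_upfront: one-time cost of fine-tuning or training a custom model
    build_monthly: recurring cost of hosting and maintaining that model
    buy_monthly:   recurring cost of consuming an equivalent API or service
    Returns None when building never pays off under these assumptions.
    """
    monthly_savings = buy_monthly - build_monthly
    if monthly_savings <= 0:
        return None
    return math.ceil(build_upfront / monthly_savings)


# Hypothetical figures: a fine-tuning project in the tens of thousands of dollars.
months = breakeven_months(build_upfront=50_000,
                          build_monthly=4_000,
                          buy_monthly=9_000)
print(months)  # 10
```

With these illustrative numbers the custom model pays for itself in ten months. If the upfront cost were in the tens of millions, the same monthly savings would never recover it on any practical horizon, which is why falling training costs change the decision.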

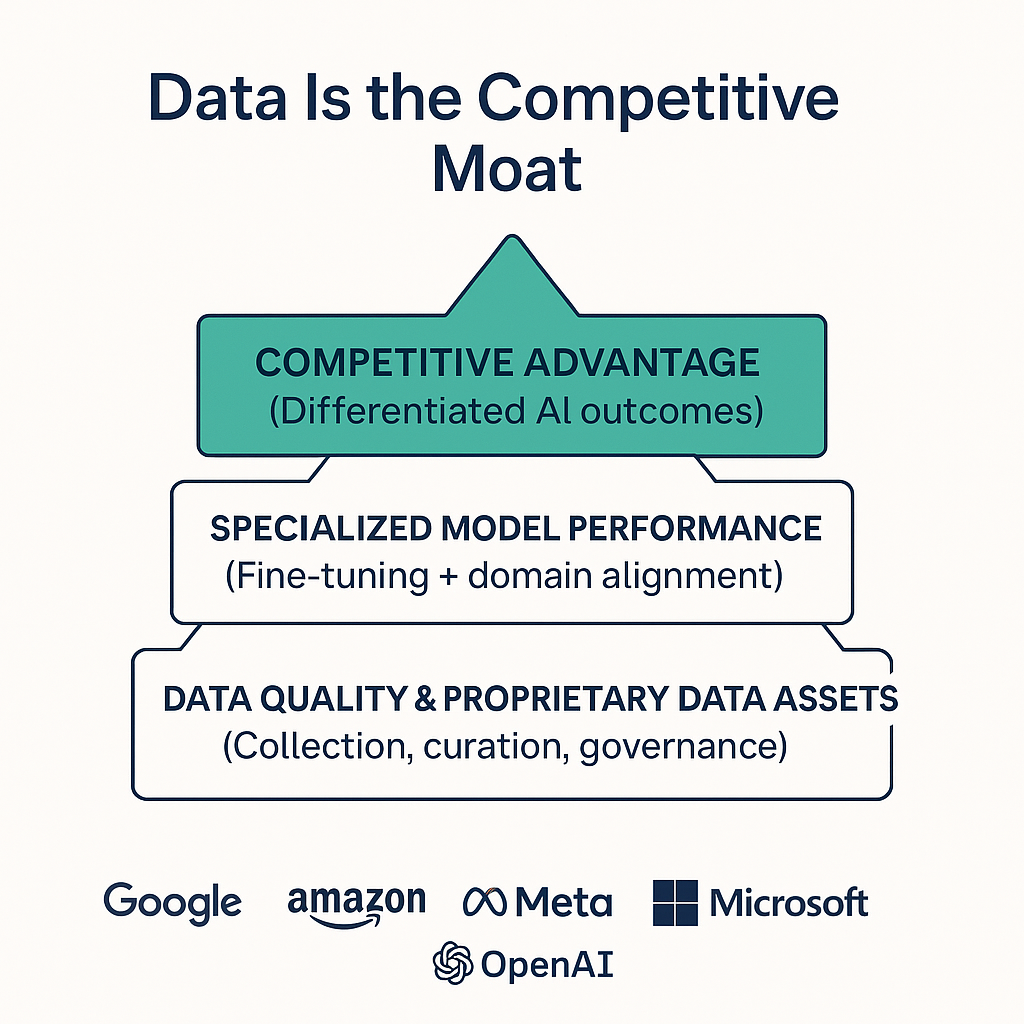

Data as Strategic Asset

As training costs decline, high-quality training data becomes the primary differentiator and constraint. Organizations with unique, valuable datasets gain disproportionate advantages in developing AI capabilities that competitors cannot easily replicate. Strategic focus should shift from minimizing training costs, which are increasingly a commodity, toward maximizing data quality, diversity, and proprietary value.

This dynamic elevates data strategy to core competitive importance. Organizations must implement robust data collection and curation processes, establish data governance ensuring quality and compliance, create mechanisms to continuously improve data assets, and protect proprietary data from competitors while leveraging external data sources appropriately.

Talent and Organizational Capability

While hardware and algorithms become more accessible, deep expertise remains scarce and valuable. Organizations need people who can navigate the expanding landscape of models and tools, optimize for specific use cases and constraints, and integrate AI capabilities into products and operations effectively. The democratization of AI paradoxically increases rather than decreases the value of skilled practitioners who can use the available tools productively.

Organizations should invest in building internal AI capabilities through hiring, training existing staff, and fostering a culture of experimentation and learning. The reduced cost of experimentation enables learning by doing at much lower financial risk than was previously possible.

Challenges and Limitations

Despite remarkable progress, significant challenges remain in AI development economics that may slow or complicate the continuing cost decline trajectory.

Hardware Supply Constraints

Even as the cost per unit of GPU performance declines, absolute demand growth has created persistent supply constraints. NVIDIA’s production capacity struggles to keep pace with exploding demand for H100 and newer chips. Cloud providers face allocation challenges, with major customers securing capacity months in advance. Startups and smaller organizations often cannot obtain adequate GPU resources even at premium prices.

These supply constraints may persist until either manufacturing capacity expands substantially or demand moderates as the current wave of AI investment stabilizes. Organizations face strategic decisions about integrating vertically into hardware or forming partnerships that secure long-term access to compute resources.

Software Ecosystem Maturity

While frameworks and tools have improved dramatically, significant expertise is still required for optimal results. Different architectures, training techniques, and deployment strategies involve complex tradeoffs that require sophisticated understanding to navigate effectively. Best practices for efficient training continue to evolve rapidly, meaning knowledge from even two years ago may be outdated.

Organizations lacking deep internal expertise may struggle to achieve cost efficiency levels reached by sophisticated teams, despite nominally having access to the same tools and hardware. This expertise gap creates consulting and services opportunities but also means cost reductions accrue disproportionately to organizations with strong technical capabilities.

Reproducibility and Benchmark Gaming

As cost efficiency becomes a competitive differentiator, organizations face incentives to report results in the most favorable light possible. This can obscure actual costs through selective disclosure, optimized reporting, and benchmark gaming, in which systems are tuned for specific tests rather than general capability.

The AI community must develop more rigorous standards for cost reporting that capture total cost of ownership including preliminary experiments and failures, amortized infrastructure investments, data acquisition and preparation expenses, and personnel time. Without such standards, claimed cost figures may mislead rather than inform strategic decision-making.
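One way to picture such a standard is a report structure that forces every cost component listed above to be stated alongside the headline figure. The sketch below is an assumption about what such a record might look like, not an existing standard. The class name, field names, and dollar amounts are all hypothetical.

```python
from dataclasses import dataclass, asdict


@dataclass
class TrainingCostReport:
    """Hypothetical total-cost-of-ownership record for one trained model (USD)."""
    final_run_compute: float          # the figure usually quoted in headlines
    preliminary_experiments: float    # earlier runs, ablations, and failures
    amortized_infrastructure: float   # share of hardware and facility investment
    data_acquisition_and_prep: float  # licensing, collection, cleaning, labeling
    personnel: float                  # engineering and research time

    def total(self) -> float:
        """Sum of every cost component."""
        return sum(asdict(self).values())

    def headline_share(self) -> float:
        """Fraction of the total that the final training run represents."""
        return self.final_run_compute / self.total()


# Illustrative numbers only; they are not drawn from any real project.
report = TrainingCostReport(
    final_run_compute=100_000,
    preliminary_experiments=150_000,
    amortized_infrastructure=50_000,
    data_acquisition_and_prep=80_000,
    personnel=120_000,
)
print(f"Total cost: ${report.total():,.0f}")
print(f"Final run as share of total: {report.headline_share():.0%}")
```

In this made-up example the final training run accounts for only a fifth of the total, which shows how a headline compute figure can understate what it actually cost to produce a model.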

Conclusion: A New Economic Foundation for AI

The dramatic reduction in AI training costs represents one of the most significant economic shifts in modern technology. From training costs improving fifty times faster than Moore’s Law to MoE architectures reducing expenses by ninety percent, from GPU hourly rates declining fifteen percent to custom silicon fundamentally restructuring the chip market, the convergence of hardware innovation, algorithmic breakthroughs, and systems optimization has created a step-function change in who can develop and deploy sophisticated AI capabilities.

This transformation extends far beyond simple cost accounting. It fundamentally democratizes AI development, enabling startups, academic researchers, and organizations in emerging markets to compete with tech giants in developing cutting-edge capabilities. It shifts competitive dynamics from infrastructure spending to data quality, algorithmic innovation, and application design. It accelerates the pace of AI integration across the economy and society by removing economic barriers that previously confined AI to narrow applications justifying massive investment.

The implications ripple across industries, geographies, and organizational types. Businesses must reassess build-vs-buy decisions as custom model development becomes economically viable. Governments must reconsider AI strategy as sovereign capability becomes more accessible. Researchers can pursue ambitious projects previously requiring corporate resources. Society must grapple with AI’s expanding role as economic constraints that tempered deployment evaporate.

Looking forward, the trajectory appears clear even if details remain uncertain. AI training costs will likely continue declining substantially for equivalent capabilities even as frontier development pushes into expensive new territory. The gap between cutting-edge research and practical deployment will narrow as yesterday’s innovations become today’s commodity capabilities. The number of organizations and individuals capable of meaningful AI development will expand by orders of magnitude.

This democratization brings both tremendous opportunity and significant responsibility. As barriers fall, organizations of all sizes can harness AI to solve problems, enhance products, and create value in ways previously impossible. Yet this same accessibility demands thoughtful governance, ethical consideration, and sustainable practices ensuring that AI development serves broad social benefit rather than narrow interests.

The economics of AI are being rewritten faster than anyone predicted just a few years ago. For those who move quickly to harness more efficient models and training approaches, this creates extraordinary strategic advantages in cost structure and capability. The AI revolution is accelerating, and its economics increasingly favor the nimble, the knowledgeable, and the bold who recognize that the barrier to entry has fundamentally transformed. The question is no longer whether organizations can afford to develop AI capabilities, but whether they can afford not to.

Sources Referenced

- About Chromebooks. (2025). “Machine Learning Model Training Cost Statistics [2025].” Industry Analysis. https://www.aboutchromebooks.com/machine-learning-model-training-cost-statistics/

- Tomasz Tunguz. (2025). “The AI Cost Curve Just Collapsed Again.” Technology Blog. https://tomtunguz.com/deepseek-sputnik/

- Epoch AI. (2023). “Trends in the dollar training cost of machine learning systems.” Research Analysis. https://epoch.ai/blog/trends-in-the-dollar-training-cost-of-machine-learning-systems

- Medium – Carmen Li. (2025). “The Evolution of GPU Pricing: A Deep Dive into Cost per FP32 FLOP for Hyperscalers.” Market Analysis. https://medium.com/@cli_87015/the-evolution-of-gpu-pricing-a-deep-dive-into-cost-per-fp32-flop-for-hyperscalers-cbf072b85bb5

- American Enterprise Institute. (2022). “Chart of the Day: Declining AI Training Costs.” Economic Research. https://www.aei.org/economics/chart-of-the-day-declining-ai-training-costs/

- Powerdrill AI. “AI Training Economics: Compute Costs, Resource Efficiency, and Global Competition in 2025.” Data Analysis Report. https://powerdrill.ai/blog/data-facts-of-notable-ai-models-and-their-development-characteristics

- Adyog Blog. (2025). “The Economics of AI Training and Inference: How DeepSeek Broke the Cost Curve.” Technical Analysis. https://blog.adyog.com/2025/02/09/the-economics-of-ai-training-and-inference-how-deepseek-broke-the-cost-curve/

- ARK Invest. “AI Training Costs Are Improving at 50x the Speed of Moore’s Law.” Investment Research. https://www.ark-invest.com/articles/analyst-research/ai-training

- CloudZero. (2025). “AI Costs In 2025: A Guide To Pricing, Implementation, And Mistakes To Avoid.” Enterprise Guide. https://www.cloudzero.com/blog/ai-costs/

- The Register. (2025). “DeepSeek didn’t really train its flagship model for $294,000.” Technology Investigation. https://www.theregister.com/2025/09/19/deepseek_cost_train/

- Modular. “How Mixture of Experts Models Enhance Machine Learning Efficiency.” Technical Documentation. https://www.modular.com/ai-resources/how-mixture-of-experts-models-enhance-machine-learning-efficiency

- Preprints.org. (2025). “Improving Deep Learning Performance with Mixture of Experts and Sparse Activation.” Research Paper. https://www.preprints.org/manuscript/202503.0611/v1

- arXiv. (2025). “A Survey on Inference Optimization Techniques for Mixture of Experts Models.” Paper 2412.14219v2. https://arxiv.org/html/2412.14219v2

- IBM Think. (2025). “What is mixture of experts?” Technical Explainer. https://www.ibm.com/think/topics/mixture-of-experts

- arXiv. (2025). “A Comprehensive Survey of Mixture-of-Experts: Algorithms, Theory, and Applications.” Paper 2503.07137. https://arxiv.org/html/2503.07137v1

- Deepfa. “Mixture of Experts (MoE) – The Efficiency Revolution in Large Language Model Architecture.” Technical Guide. https://deepfa.ir/en/blog/mixture-of-experts-moe-architecture-guide

- 4idiotz. (2025). “DeepSeek-V4 2025 parameter count and architecture.” Technical Analysis. https://4idiotz.com/tech/artificial-intelligence/deepseek-v4-2025-parameter-count-and-architecture/

- Adyog Blog. (2025). “How Mixture of Experts (MoE) and Memory-Efficient Attention (MEA) Are Changing AI.” https://blog.adyog.com/2025/02/09/how-mixture-of-experts-moe-and-memory-efficient-attention-mea-are-changing-ai/

- HAL Science. (2025). “A Survey of Mixture of Experts Models: Architectures and Applications.” Research Paper. https://hal.science/hal-05113196v1/file/20250527153855_FEI-2025-3-006.1.pdf

- AI Multiple. “Top 20+ AI Chip Makers: NVIDIA & Its Competitors.” Industry Report. https://research.aimultiple.com/ai-chip-makers/

- PatentPC. (2025). “The AI Chip Market Explosion: Key Stats on Nvidia, AMD, and Intel’s AI Dominance.” Market Analysis. https://patentpc.com/blog/the-ai-chip-market-explosion-key-stats-on-nvidia-amd-and-intels-ai-dominance

- SQ Magazine. (2025). “AI Chip Statistics 2025: Funding, Startups & Industry Giants.” Market Research. https://sqmagazine.co.uk/ai-chip-statistics/

- PatentPC. (2025). “AI Chips in 2020-2030: How Nvidia, AMD, and Google Are Dominating (Key Stats).” Historical Analysis. https://patentpc.com/blog/ai-chips-in-2020-2030-how-nvidia-amd-and-google-are-dominating-key-stats

- PenBrief. (2025). “The AMD AI chip challenge Nvidia 2025: Reshaping the AI Hardware Landscape.” Competitive Analysis. https://www.penbrief.com/amd-nvidia-ai-chip-2025/

- Francesca Tabor. (2025). “Top 20 AI Chip Makers: NVIDIA & Its Competitors in 2025.” Industry Overview. https://www.francescatabor.com/articles/2025/2/24/top-20-ai-chip-makers-nvidia-amp-its-competitors-in-2025

- Vertu. (2025). “10 Leading AI Hardware Companies Shaping 2025.” Technology Report. https://vertu.com/ai-tools/top-ai-hardware-companies-2025/

- PatentPC. (2025). “The AI Chip Boom: Market Growth and Demand for GPUs & NPUs (Latest Data).” Market Analysis. https://patentpc.com/blog/the-ai-chip-boom-market-growth-and-demand-for-gpus-npus-latest-data

- Yahoo Finance. (2025). “Nvidia’s Big Tech customers might also be its biggest competitive threat.” Business Analysis. https://finance.yahoo.com/news/nvidias-big-tech-customers-might-also-be-its-biggest-competitive-threat-153032596.html

- Skywork AI. “The AI Chip War: NVIDIA vs. AMD’s Latest Battle Situation.” Industry Report. https://skywork.ai/skypage/en/The-AI-Chip-War:-NVIDIA-vs.-AMD’s-Latest-Battle-Situation/1950027542372126720